autogluon.core.scheduler

Example

Define a toy training function with searchable spaces:

>>> import numpy as np
>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True),
...     wd=ag.space.Real(1e-3, 1e-2),
...     epochs=10)
... def train_fn(args, reporter):
...     print('lr: {}, wd: {}'.format(args.lr, args.wd))
...     for e in range(args.epochs):
...         dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e))
...         reporter(epoch=e+1, accuracy=dummy_accuracy, lr=args.lr, wd=args.wd)

Note that the epoch value passed to reporter must be the number of epochs completed, so it starts at 1.

Create a scheduler and use it to run training jobs:

>>> scheduler = ag.scheduler.HyperbandScheduler(
...     train_fn,
...     resource={'num_cpus': 2, 'num_gpus': 0},
...     num_trials=100,
...     reward_attr='accuracy',
...     time_attr='epoch',
...     grace_period=1,
...     reduction_factor=3,
...     type='stopping')
>>> scheduler.run()
>>> scheduler.join_jobs()

Note that HyperbandScheduler obtains the maximum number of epochs from train_fn.args.epochs (specified by epochs=10 in the example above in the ag.args decorator). The value can also be passed as max_t to HyperbandScheduler.
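The precedence described above can be pictured with a small, library-free sketch. The helper name `resolve_max_t` and the lookup order (epochs before max_t) are assumptions made for illustration; only the rule that an explicit max_t wins is taken from the text.

```python
from types import SimpleNamespace


def resolve_max_t(explicit_max_t, train_args):
    """Return the maximum resource per job, or None if it cannot be inferred."""
    # An explicitly passed max_t takes precedence over the training arguments.
    if explicit_max_t is not None:
        return explicit_max_t
    # Otherwise fall back to the training arguments. Checking 'epochs'
    # before 'max_t' is an assumption of this sketch.
    for name in ("epochs", "max_t"):
        value = getattr(train_args, name, None)
        if value is not None:
            return value
    return None


# Stand-in for train_fn.args in the example above (hypothetical object).
args = SimpleNamespace(lr=0.01, epochs=10)
print(resolve_max_t(None, args))  # 10
print(resolve_max_t(27, args))    # 27
```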

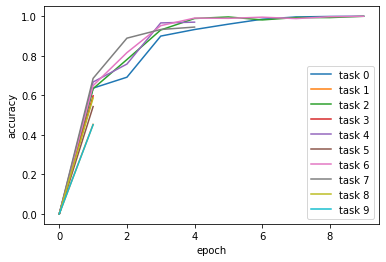

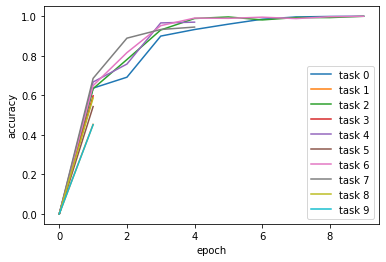

Visualize the results:

>>> scheduler.get_training_curves(plot=True)

Schedulers

| Scheduler | Description |
| --- | --- |
| FIFOScheduler | Simple scheduler that just runs trials in submission order. |
| HyperbandScheduler | Implements different variants of asynchronous Hyperband. |
| RLScheduler | Scheduler that uses Reinforcement Learning with an LSTM controller created based on the provided search spaces. |

FIFOScheduler

class autogluon.core.scheduler.FIFOScheduler(train_fn, **kwargs)

Simple scheduler that just runs trials in submission order.

- Parameters

- train_fn : callable

A task launch function for training.

- args : object (optional)

Default arguments for launching train_fn.

- resource : dict

Computation resources. For example, {'num_cpus': 2, 'num_gpus': 1}.

- searcher : str or BaseSearcher

Searcher (makes the get_config decisions). If str, this is passed to searcher_factory along with search_options.

- search_options : dict

If searcher is str, these arguments are passed to searcher_factory.

- checkpoint : str

If a filename is given here, a checkpoint of the scheduler (and searcher) state is written to that file every time a job finishes. Note: May not be fully supported by all searchers.

- resume : bool

If True, the scheduler state is loaded from checkpoint, and the experiment starts from there. Note: May not be fully supported by all searchers.

- num_trials : int

Maximum number of jobs run in the experiment. One of num_trials or time_out must be given.

- time_out : float

If given, jobs are started only until this time_out (wall clock time) has passed. After that, running jobs are also stopped when they next report a result. One of num_trials or time_out must be given.

- reward_attr : str

Name of the reward attribute (i.e., the metric to maximize) in the data obtained from reporter.

- constraint_attr : str

Name of the constraint attribute in the data obtained from reporter, used for running constrained Bayesian optimization.

- time_attr : str

Name of the resource (or time) attribute in the data obtained from reporter. This attribute is optional for FIFO scheduling, but becomes mandatory in multi-fidelity scheduling (e.g., Hyperband). Note: The type of resource must be int.

- dist_ip_addrs : list of str

IP addresses of remote machines.

- training_history_callback : callable

Callback function called every time a result is added to training_history, provided at least training_history_callback_delta_secs seconds have passed since the most recent call. See _add_training_result for the signature of this callback function. Use this callback to serialize self.training_history at regular intervals.

- training_history_callback_delta_secs : float

See training_history_callback.

- training_history_searcher_info : bool

If True, information about the current state of the searcher is added to every reported_result before it is added to training_history. This includes, in particular, the current hyperparameters of the searcher's surrogate model, as well as the dataset size.

- delay_get_config : bool

If True, the call to searcher.get_config is delayed until a worker resource for evaluation is available. Otherwise, get_config is called just after a job has been started. For searchers which adapt to past data, True should be preferred. Otherwise, it does not matter.

- stop_jobs_after_time_out : bool

Relevant only if time_out is used. If True, jobs which report a metric are stopped once time_out has passed. Otherwise, such jobs are allowed to continue until the end, or until stopped for other reasons. In the latter case, an experiment can run far longer than time_out.
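The interplay of training_history_callback and training_history_callback_delta_secs amounts to rate-limiting a callback. The sketch below illustrates that idea only: the class `ThrottledCallback`, its one-argument callback signature, and the choice to fire on the very first result are assumptions for illustration, not the scheduler's code (the real signature is the one documented at _add_training_result).

```python
import time


class ThrottledCallback:
    """Invoke `callback` at most once every `delta_secs` seconds."""

    def __init__(self, callback, delta_secs, clock=time.monotonic):
        self.callback = callback
        self.delta_secs = delta_secs
        self.clock = clock      # injectable so the sketch can be tested
        self._last_call = None  # time of the most recent call, None if never

    def __call__(self, training_history):
        now = self.clock()
        # Assumption: the first result always triggers the callback.
        if self._last_call is None or now - self._last_call >= self.delta_secs:
            self._last_call = now
            self.callback(training_history)
            return True
        return False


# Usage with a fake clock: results arrive at t = 0, 30 and 61 seconds.
fake_now = [0.0]
saved = []
cb = ThrottledCallback(lambda h: saved.append(dict(h)),
                       delta_secs=60, clock=lambda: fake_now[0])
history = {}
for t, acc in [(0, 0.5), (30, 0.6), (61, 0.7)]:
    fake_now[0] = float(t)
    history[t] = acc
    cb(history)
print(len(saved))  # 2 (the result at t = 30 did not trigger a call)
```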

Examples

>>> import numpy as np
>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True),
...     wd=ag.space.Real(1e-3, 1e-2))
... def train_fn(args, reporter):
...     print('lr: {}, wd: {}'.format(args.lr, args.wd))
...     for e in range(10):
...         dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e))
...         reporter(epoch=e+1, accuracy=dummy_accuracy, lr=args.lr, wd=args.wd)
>>> scheduler = ag.scheduler.FIFOScheduler(train_fn,
...                                        resource={'num_cpus': 2, 'num_gpus': 0},
...                                        num_trials=20,
...                                        reward_attr='accuracy',
...                                        time_attr='epoch')
>>> scheduler.run()
>>> scheduler.join_jobs()
>>> scheduler.get_training_curves(plot=True)

- Attributes

- num_finished_tasks

Methods

| Method | Description |
| --- | --- |
| add_job(task, **kwargs) | Add a training task to the scheduler. |
| add_remote(ip_addrs) | Add remote nodes to the scheduler computation resource. |
| add_task(task, **kwargs) | Deprecated in favor of add_job(). |
| get_best_config() | Get the best configuration from the finished jobs. |
| get_best_reward() | Get the best reward from the finished jobs. |
| get_best_task_id() | Get the task id that results in the best configuration/best reward. |
| get_training_curves([filename, plot, use_legend]) | Get training curves. |
| join_jobs([timeout]) | Wait for all scheduled jobs to finish. |
| load_state_dict(state_dict) | Load from the saved state dict. |
| on_task_add(task, **kwargs) | Called when a new task is added. |
| on_task_report(task, result) | Called by the reporter thread once a new result is reported. |
| run(**kwargs) | Run multiple trials. |
| run_job(task) | Run a training task on the scheduler (synchronously). |
| run_with_config(config) | Run with config for final fit. |
| save([checkpoint]) | Save a checkpoint. |
| schedule_next() | Schedule the next task suggested by the searcher. |
| shutdown() | Deprecated in favor of autogluon.done(). |
| state_dict([destination, no_fifo_lock]) | Returns a dictionary containing the whole state of the scheduler. |
| upload_files(files, **kwargs) | Upload files to remote machines, so that they are accessible by import or load. |
| join_tasks | |

add_job(task, **kwargs)

Add a training task to the scheduler.

- Args:

task (autogluon.scheduler.Task): a new training task

- Relevant entries in kwargs:

bracket: Hyperband bracket to be used. It has been sampled in _promote_config.

new_config: If True, the task starts a new config evaluation; otherwise it promotes a config (only if type == 'promotion').

- Only if new_config == False:

config_key: Internal key for the config.

resume_from: The config is promoted from this milestone.

milestone: The config is promoted to this milestone (the next one after resume_from).

add_remote(ip_addrs)

Add remote nodes to the scheduler computation resource.

add_task(task, **kwargs)

add_task() is now deprecated in favor of add_job().

get_best_task_id()

Get the task id that results in the best configuration/best reward.

If there are duplicated configurations, the id of the first one is returned.
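The tie-breaking rule for duplicated configurations can be illustrated as follows. This is a stand-alone sketch: the function `first_task_with_config` and the dictionary layout are hypothetical and say nothing about how the scheduler stores its tasks.

```python
def first_task_with_config(task_configs, best_config):
    """Return the id of the first task that evaluated `best_config`.

    `task_configs` maps task id -> config and is assumed to be in
    submission order (Python dicts preserve insertion order).
    """
    for task_id, config in task_configs.items():
        if config == best_config:
            return task_id
    return None


# Tasks 1 and 2 evaluated the same configuration; the earlier id is returned.
configs = {0: {'lr': 0.01}, 1: {'lr': 0.003}, 2: {'lr': 0.003}}
print(first_task_with_config(configs, {'lr': 0.003}))  # 1
```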

get_training_curves(filename=None, plot=False, use_legend=True)

Get training curves.

- Parameters

- filename : str

- plot : bool

- use_legend : bool

Examples

>>> scheduler.run()
>>> scheduler.join_jobs()
>>> scheduler.get_training_curves(plot=True)

join_jobs(timeout=None)

Wait for all scheduled jobs to finish.

load_state_dict(state_dict)

Load from the saved state dict. This can be used to resume an experiment from a checkpoint (see state_dict for caveats).

This method must only be called as part of scheduler construction. Calling it in the middle of an experiment can leave the scheduler or searcher in an undefined internal state.

Examples

>>> scheduler.load_state_dict(ag.load('checkpoint.ag'))

on_task_add(task, **kwargs)

Called when a new task is added. Registers the new task and informs the searcher (pending evaluation) and train_fn.

- Parameters

- task

- kwargs

on_task_report(task, result)

Called by the reporter thread once a new result is reported.

- Parameters

- task

- result

- Returns

Whether the reporter should move on. If not, it terminates.

run_job(task)

Run a training task on the scheduler (synchronously).

run_with_config(config)

Run with config for final fit. This launches a single training trial with fixed hyperparameter values. For example, after HPO has identified the best hyperparameter values based on a hold-out dataset, one can use this function to retrain a model with the same hyperparameters on all the available labeled data (including the hold-out set). It can also return other objects or states.

shutdown()

shutdown() is now deprecated in favor of autogluon.done().

state_dict(destination=None, no_fifo_lock=False)

Returns a dictionary containing the whole state of the scheduler. This is used for checkpointing.

Note that the checkpoint only contains information which has been registered with the scheduler and searcher. It does not contain information about currently running jobs, except what they reported before the checkpoint. Therefore, resuming an experiment from a checkpoint is slightly different from continuing the experiment past the checkpoint. The former behaves as if all currently running jobs are terminated at the checkpoint, and new jobs are scheduled from there, starting from the scheduler and searcher state according to all information recorded until the checkpoint.

Examples

>>> ag.save(scheduler.state_dict(), 'checkpoint.ag')

classmethod upload_files(files, **kwargs)

Upload files to remote machines, so that they are accessible by import or load.

HyperbandScheduler

class autogluon.core.scheduler.HyperbandScheduler(train_fn, **kwargs)

Implements different variants of asynchronous Hyperband.

See 'type' for the different variants. One implementation detail: when multiple brackets are used, tasks are allocated to brackets randomly, based on a distribution inspired by the synchronous Hyperband case.

For definitions of concepts (bracket, rung, milestone), see

Li, Jamieson, Rostamizadeh, Gonina, Hardt, Recht, Talwalkar (2018) A System for Massively Parallel Hyperparameter Tuning https://arxiv.org/abs/1810.05934

or

Tiao, Klein, Lienart, Archambeau, Seeger (2020) Model-based Asynchronous Hyperparameter and Neural Architecture Search https://arxiv.org/abs/2003.10865

Note: This scheduler requires both reward and resource (time) to be returned by the reporter. Here, resource (time) values must be positive integers. If time_attr == 'epoch', this should be the number of epochs done, starting from 1 (not the epoch number, starting from 0).

Rung levels and promotion quantiles:

Rung levels are values of the resource attribute at which stop/go decisions are made for jobs, comparing their reward against others at the same level. These rung levels (positive, strictly increasing) can be specified via rung_levels; the largest must be <= max_t. If rung_levels is not given, rung levels are specified by grace_period and reduction_factor:

[grace_period * (reduction_factor ** j)], j = 0, 1, …

This is the default choice for successive halving (Hyperband). Note: If rung_levels is given, then grace_period, reduction_factor are ignored. If they are given, a warning is logged.
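As a quick illustration of the default rule, the helper below enumerates grace_period * reduction_factor ** j up to max_t. It is a sketch written from the formula above, not code taken from the scheduler; the function name `default_rung_levels` is made up here, and treating a level equal to max_t as a rung level is an assumption based only on the stated "<= max_t" constraint.

```python
def default_rung_levels(grace_period, reduction_factor, max_t):
    """Rung levels grace_period * reduction_factor**j that do not exceed max_t."""
    if grace_period < 1:
        raise ValueError("grace_period must be a positive int")
    if reduction_factor < 2:
        raise ValueError("reduction_factor must be >= 2")
    levels = []
    level = grace_period
    while level <= max_t:
        levels.append(level)
        level *= reduction_factor
    return levels


# Settings from the example at the top: grace_period=1, reduction_factor=3, epochs=10.
print(default_rung_levels(1, 3, 10))  # [1, 3, 9]
print(default_rung_levels(2, 2, 20))  # [2, 4, 8, 16]
```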

The rung levels determine the quantiles to be used in the stop/go decisions. If rung levels are r_0, r_1, …, define

q_j = r_j / r_{j+1}

q_j is the promotion quantile at rung level r_j. On average, a fraction q_j of the jobs reaching r_j can continue; the remaining ones are stopped (or paused). In the default successive halving case:

q_j = 1 / reduction_factor for all j
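The quantile definition, and one simple way such a quantile could drive a stop/go decision, can be sketched as below. `promotion_quantiles` follows the formula q_j = r_j / r_{j+1} directly. `should_continue` is a simplified, hypothetical decision rule (keep a job if its reward is in the top q_j fraction of rewards already recorded at that rung); it is not the logic of StoppingRungSystem or PromotionRungSystem.

```python
import math


def promotion_quantiles(rung_levels):
    """q_j = r_j / r_{j+1} for successive rung levels."""
    return [r / r_next for r, r_next in zip(rung_levels, rung_levels[1:])]


def should_continue(reward, rewards_at_rung, quantile):
    """Simplified stop/go rule; higher reward is better.

    `rewards_at_rung` holds rewards recorded earlier at this rung.
    """
    if not rewards_at_rung:
        return True  # nothing to compare against yet
    ranked = sorted(rewards_at_rung, reverse=True)
    # Index of the weakest reward still inside the top `quantile` fraction.
    cutoff_index = max(0, math.ceil(quantile * len(ranked)) - 1)
    return reward >= ranked[cutoff_index]


# Custom rung levels give a different quantile per rung.
print(promotion_quantiles([1, 2, 8]))  # [0.5, 0.25]

seen = [0.9, 0.8, 0.7, 0.6]
print(should_continue(0.85, seen, 0.5))  # True
print(should_continue(0.65, seen, 0.5))  # False
```

With the default levels [1, 3, 9] and reduction_factor=3, every quantile comes out as 1/3, matching the successive halving case above.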

- Parameters

- train_fn : callable

A task launch function for training.

- args : object (optional)

Default arguments for launching train_fn.

- resource : dict

Computation resources. For example, {'num_cpus': 2, 'num_gpus': 1}.

- searcher : str or BaseSearcher

Searcher (makes the get_config decisions). If str, this is passed to searcher_factory along with search_options.

- search_options : dict

If searcher is str, these arguments are passed to searcher_factory.

- checkpoint : str

If a filename is given here, a checkpoint of the scheduler (and searcher) state is written to that file every time a job finishes. Note: May not be fully supported by all searchers.

- resume : bool

If True, the scheduler state is loaded from checkpoint, and the experiment starts from there. Note: May not be fully supported by all searchers.

- num_trials : int

Maximum number of jobs run in the experiment. One of num_trials or time_out must be given.

- time_out : float

If given, jobs are started only until this time_out (wall clock time) has passed. After that, running jobs are also stopped when they next report a result. One of num_trials or time_out must be given.

- reward_attr : str

Name of the reward attribute (i.e., the metric to maximize) in the data obtained from reporter.

- time_attr : str

Name of the resource (or time) attribute in the data obtained from reporter. Note: The type of resource must be positive int.

- max_t : int

Maximum resource (see time_attr) to be used for a job. Together with grace_period and reduction_factor, this is used to determine rung levels in Hyperband brackets (if rung_levels is not given). Note: If this is not given, we try to infer its value from train_fn.args, checking train_fn.args.epochs or train_fn.args.max_t. If max_t is given as an argument here, it takes precedence.

- grace_period : int

Minimum resource (see time_attr) to be used for a job. Ignored if rung_levels is given.

- reduction_factor : int (>= 2)

Parameter to determine rung levels in successive halving (Hyperband). Ignored if rung_levels is given.

- rung_levels : list of int

If given, prescribes the set of rung levels to be used. Must contain positive integers, strictly increasing. This information overrides grace_period and reduction_factor, but not max_t. Note that the stop/promote rule in the successive halving scheduler is set based on the ratio of successive rung levels.

- brackets : int

Number of brackets to be used in Hyperband. Each bracket has a different grace period; all share max_t and reduction_factor. If brackets == 1, we just run successive halving.

- training_history_callback : callable

Callback function called every time a result is added to training_history, provided at least training_history_callback_delta_secs seconds have passed since the most recent call. See _add_training_result for the signature of this callback function. Use this callback to serialize self.training_history at regular intervals.

- training_history_callback_delta_secs : float

See training_history_callback.

- training_history_searcher_info : bool

If True, information about the current state of the searcher is added to every reported_result before it is added to training_history. This includes, in particular, the current hyperparameters of the searcher's surrogate model, as well as the dataset size.

- delay_get_config : bool

If True, the call to searcher.get_config is delayed until a worker resource for evaluation is available. Otherwise, get_config is called just after a job has been started. For searchers which adapt to past data, True should be preferred. Otherwise, it does not matter.

- stop_jobs_after_time_out : bool

Relevant only if time_out is used. If True, jobs which report a metric are stopped once time_out has passed. Otherwise, such jobs are allowed to continue until the end, or until stopped for other reasons. In the latter case, an experiment can run far longer than time_out.

- type : str

Type of Hyperband scheduler:

- stopping: A config eval is executed by a single task. The task is stopped at a milestone if its metric is worse than a fraction of those that reached the milestone earlier; otherwise it continues. As implemented in Ray/Tune: https://ray.readthedocs.io/en/latest/tune-schedulers.html#asynchronous-hyperband See StoppingRungSystem.

- promotion: A config eval may be associated with multiple tasks over its lifetime. It is never terminated, but may be paused. Whenever a task becomes available, it may promote a config to the next milestone, if that config is better than a fraction of the others that reached the milestone. If no config can be promoted, a new one is chosen. This variant may benefit from pause&resume, which is not directly supported here. As proposed in this paper (termed ASHA): https://arxiv.org/abs/1810.05934 See PromotionRungSystem.

- dist_ip_addrs : list of str

IP addresses of remote machines.

- searcher_data : str

Relevant only if a model-based searcher is used, and if train_fn is such that we receive results (from the reporter) at each successive resource level, not just at the rung levels. Example: For NN tuning and time_attr == 'epoch', we receive a result for each epoch, but not all epoch values are also rung levels. searcher_data determines which of these results are passed to the searcher. As a rule, the more data the searcher receives, the better its fit, but also the more expensive get_config may become. Choices:

- 'rungs' (default): Only results at rung levels. Cheapest.

- 'all': All results. Most expensive.

- 'rungs_and_last': Results at rung levels, plus the most recent result. This means that in between rung levels, only the most recent result is used by the searcher. In cost, this option lies in between 'rungs' and 'all'.

- rung_system_per_bracket : bool

This concerns Hyperband with brackets > 1. When starting a job for a new config, it is assigned a randomly sampled bracket. The larger the bracket, the larger the grace period for the config. If rung_system_per_bracket is True, we maintain separate rung level systems for each bracket, so that configs only compete with others started in the same bracket. This is the default behaviour of Hyperband. If False, we use a single rung level system, so that all configs compete with each other. In this case, the bracket of a config only determines the initial grace period, i.e. the first milestone at which it starts competing with others.

The concept of brackets in Hyperband is meant to hedge against overly aggressive filtering in successive halving, based on low fidelity criteria. In practice, successive halving (i.e., brackets = 1) often works best in the asynchronous case (as implemented here). If brackets > 1, the hedging is stronger if rung_system_per_bracket is True.

- random_seed : int

Random seed for the PRNG used for bracket sampling.

- do_snapshots : bool

Whether to support snapshots. If True, a snapshot of all running tasks and rung levels is returned by _promote_config. This snapshot is passed to the searcher in get_config. Note: Currently, only the stopping variant supports snapshots.
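To make the three searcher_data choices from the parameter list concrete, the sketch below filters the results reported by a single job. It is an illustration only: `results_for_searcher` is a hypothetical helper, and results are represented here as plain (resource, reward) pairs rather than the scheduler's own data structures.

```python
def results_for_searcher(results, rung_levels, searcher_data="rungs"):
    """Select which (resource, reward) results of one job reach the searcher.

    `results` is the list of results in the order they were reported.
    """
    if searcher_data == "all":
        return list(results)
    rungs = set(rung_levels)
    at_rungs = [(r, v) for r, v in results if r in rungs]
    if searcher_data == "rungs":
        return at_rungs
    if searcher_data == "rungs_and_last":
        # Add the most recent result unless it is already a rung-level result.
        if results and results[-1][0] not in rungs:
            return at_rungs + [results[-1]]
        return at_rungs
    raise ValueError("unknown searcher_data: {!r}".format(searcher_data))


# One result per epoch for 5 epochs, with rung levels 1, 3, 9.
reported = [(1, 0.42), (2, 0.55), (3, 0.61), (4, 0.66), (5, 0.7)]
print(results_for_searcher(reported, [1, 3, 9], "rungs"))
# [(1, 0.42), (3, 0.61)]
print(results_for_searcher(reported, [1, 3, 9], "rungs_and_last"))
# [(1, 0.42), (3, 0.61), (5, 0.7)]
print(len(results_for_searcher(reported, [1, 3, 9], "all")))  # 5
```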

See also

HyperbandBracketManager

Examples

>>> import numpy as np
>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True),
...     wd=ag.space.Real(1e-3, 1e-2),
...     epochs=10)
... def train_fn(args, reporter):
...     print('lr: {}, wd: {}'.format(args.lr, args.wd))
...     for e in range(args.epochs):
...         dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e))
...         reporter(epoch=e+1, accuracy=dummy_accuracy, lr=args.lr, wd=args.wd)
>>> scheduler = ag.scheduler.HyperbandScheduler(
...     train_fn,
...     resource={'num_cpus': 2, 'num_gpus': 0},
...     num_trials=20,
...     reward_attr='accuracy',
...     time_attr='epoch',
...     grace_period=1)
>>> scheduler.run()
>>> scheduler.join_jobs()
>>> scheduler.get_training_curves(plot=True)

- Attributes

- num_finished_tasks

Methods

| Method | Description |
| --- | --- |
| add_job(task, **kwargs) | Add a training task to the scheduler. |
| add_remote(ip_addrs) | Add remote nodes to the scheduler computation resource. |
| add_task(task, **kwargs) | Deprecated in favor of add_job(). |
| get_best_config() | Get the best configuration from the finished jobs. |
| get_best_reward() | Get the best reward from the finished jobs. |
| get_best_task_id() | Get the task id that results in the best configuration/best reward. |
| get_training_curves([filename, plot, use_legend]) | Get training curves. |
| join_jobs([timeout]) | Wait for all scheduled jobs to finish. |
| load_state_dict(state_dict) | Load from the saved state dict. |
| on_task_add(task, **kwargs) | Called when a new task is added. |
| on_task_report(task, result) | Called by the reporter thread once a new result is reported. |
| run(**kwargs) | Run multiple trials. |
| run_job(task) | Run a training task on the scheduler (synchronously). |
| run_with_config(config) | Run with config for final fit. |
| save([checkpoint]) | Save a checkpoint. |
| schedule_next() | Schedule the next task suggested by the searcher. |
| shutdown() | Deprecated in favor of autogluon.done(). |
| state_dict([destination, no_fifo_lock]) | Returns a dictionary containing the whole state of the scheduler. |
| upload_files(files, **kwargs) | Upload files to remote machines, so that they are accessible by import or load. |
| join_tasks | |

add_job(task, **kwargs)

Add a training task to the scheduler.

- Args:

task (autogluon.scheduler.Task): a new training task

- Relevant entries in kwargs:

bracket: Hyperband bracket to be used. It has been sampled in _promote_config.

new_config: If True, the task starts a new config evaluation; otherwise it promotes a config (only if type == 'promotion').

- Only if new_config == False:

config_key: Internal key for the config.

resume_from: The config is promoted from this milestone.

milestone: The config is promoted to this milestone (the next one after resume_from).

add_remote(ip_addrs)

Add remote nodes to the scheduler computation resource.

add_task(task, **kwargs)

add_task() is now deprecated in favor of add_job().

get_best_config()

Get the best configuration from the finished jobs.

get_best_reward()

Get the best reward from the finished jobs.

get_best_task_id()

Get the task id that results in the best configuration/best reward.

If there are duplicated configurations, the id of the first one is returned.

get_training_curves(filename=None, plot=False, use_legend=True)

Get training curves.

- Parameters

- filename : str

- plot : bool

- use_legend : bool

Examples

>>> scheduler.run()
>>> scheduler.join_jobs()
>>> scheduler.get_training_curves(plot=True)

join_jobs(timeout=None)

Wait for all scheduled jobs to finish.

load_state_dict(state_dict)

Load from the saved state dict.

Examples

>>> import autogluon.core as ag
>>> scheduler.load_state_dict(ag.load('checkpoint.ag'))

on_task_add(task, **kwargs)

Called when a new task is added. Registers the new task and informs the searcher (pending evaluation) and train_fn (resume_from, checkpointing).

Relevant entries in kwargs:

- bracket: Hyperband bracket to be used. It has been sampled in _promote_config.

- new_config: If True, the task starts a new config evaluation; otherwise it promotes a config (only if type == 'promotion').

- elapsed_time: Time stamp.

Only if new_config == False:

- config_key: Internal key for the config.

- resume_from: The config is promoted from this milestone.

- milestone: The config is promoted to this milestone (the next one after resume_from).

- Parameters

- task

- kwargs

on_task_report(task, result)

Called by the reporter thread once a new result is reported.

- Parameters

- task

- result

- Returns

Whether the reporter should move on. If not, it terminates.

run(**kwargs)

Run multiple trials.

run_job(task)

Run a training task on the scheduler (synchronously).

run_with_config(config)

Run with config for final fit. This launches a single training trial with fixed hyperparameter values. For example, after HPO has identified the best hyperparameter values based on a hold-out dataset, one can use this function to retrain a model with the same hyperparameters on all the available labeled data (including the hold-out set). It can also return other objects or states.

save(checkpoint=None)

Save a checkpoint.

schedule_next()

Schedule the next task suggested by the searcher.

shutdown()

shutdown() is now deprecated in favor of autogluon.done().

state_dict(destination=None, no_fifo_lock=False)

Returns a dictionary containing the whole state of the scheduler.

Examples

>>> import autogluon.core as ag
>>> ag.save(scheduler.state_dict(), 'checkpoint.ag')

classmethod upload_files(files, **kwargs)

Upload files to remote machines, so that they are accessible by import or load.

RLScheduler

class autogluon.core.scheduler.RLScheduler(train_fn, **kwargs)

Scheduler that uses Reinforcement Learning with an LSTM controller created based on the provided search spaces.

- Parameters

- train_fn : callable

A task launch function for training. Note: please add the @ag.args decorator to the original function.

- args : object (optional)

Default arguments for launching train_fn.

- resource : dict

Computation resources. For example, {'num_cpus': 2, 'num_gpus': 1}.

- searcher : object (optional)

AutoGluon searcher. For example, autogluon.searcher.RandomSearcher.

- time_attr : str

A training result attribute to use for comparing time. Note that you can pass in something non-temporal, such as training_epoch, as a measure of progress; the only requirement is that the attribute increases monotonically.

- reward_attr : str

The training result objective value attribute. As with time_attr, this may refer to any objective value. Stopping procedures will use this attribute.

- controller_resource : int

Batch size for training controllers.

- dist_ip_addrs : list of str

IP addresses of remote machines.
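The monotonicity requirement on time_attr can be checked on a list of reported values with a helper like the one below. This is an illustrative sketch, not part of the library; the function name is made up, and reading "increase monotonically" as strictly increasing is an assumption.

```python
def is_monotonically_increasing(values):
    """True if each reported time_attr value is larger than the previous one."""
    return all(a < b for a, b in zip(values, values[1:]))


# Epoch counters reported as 1, 2, 3, ... satisfy the requirement.
print(is_monotonically_increasing([1, 2, 3, 4]))  # True
# A value that goes backwards does not.
print(is_monotonically_increasing([1, 3, 2]))     # False
```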

Examples

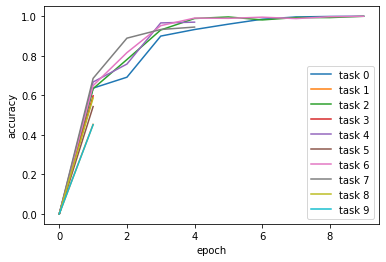

>>> import numpy as np >>> import autogluon.core as ag >>> >>> @ag.args( ... lr=ag.space.Real(1e-3, 1e-2, log=True), ... wd=ag.space.Real(1e-3, 1e-2)) >>> def train_fn(args, reporter): ... print('lr: {}, wd: {}'.format(args.lr, args.wd)) ... for e in range(10): ... dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e)) ... reporter(epoch=e+1, accuracy=dummy_accuracy, lr=args.lr, wd=args.wd) ... >>> scheduler = ag.scheduler.RLScheduler(train_fn, ... resource={'num_cpus': 2, 'num_gpus': 0}, ... num_trials=20, ... reward_attr='accuracy', ... time_attr='epoch') >>> scheduler.run() >>> scheduler.join_jobs() >>> scheduler.get_training_curves(plot=True)

- Attributes

- num_finished_tasks

Methods

| Method | Description |
| --- | --- |
| add_job(task, **kwargs) | Add a training task to the scheduler. |
| add_remote(ip_addrs) | Add remote nodes to the scheduler computation resource. |
| add_task(task, **kwargs) | Deprecated in favor of add_job(). |
| get_best_config() | Get the best configuration from the finished jobs. |
| get_best_reward() | Get the best reward from the finished jobs. |
| get_best_task_id() | Get the task id that results in the best configuration/best reward. |
| get_training_curves([filename, plot, use_legend]) | Get training curves. |
| join_jobs([timeout]) | Wait for all scheduled jobs to finish. |
| load_state_dict(state_dict) | Load from the saved state dict. |
| on_task_add(task, **kwargs) | Called when a new task is added. |
| on_task_report(task, result) | Called by the reporter thread once a new result is reported. |
| run(**kwargs) | Run multiple trials. |
| run_job(task) | Run a training task on the scheduler (synchronously). |
| run_with_config(config) | Run with config for final fit. |
| save([checkpoint]) | Save a checkpoint. |
| schedule_next() | Schedule the next task suggested by the searcher. |
| shutdown() | Deprecated in favor of autogluon.done(). |
| state_dict([destination]) | Returns a dictionary containing the whole state of the scheduler. |
| upload_files(files, **kwargs) | Upload files to remote machines, so that they are accessible by import or load. |
| join_tasks | |
| sync_schedule_tasks | |

add_job(task, **kwargs)

Add a training task to the scheduler.

- Args:

task (autogluon.scheduler.Task): a new training task

add_remote(ip_addrs)

Add remote nodes to the scheduler computation resource.

add_task(task, **kwargs)

add_task() is now deprecated in favor of add_job().

get_best_config()

Get the best configuration from the finished jobs.

get_best_reward()

Get the best reward from the finished jobs.

get_best_task_id()

Get the task id that results in the best configuration/best reward.

If there are duplicated configurations, the id of the first one is returned.

get_training_curves(filename=None, plot=False, use_legend=True)

Get training curves.

- Parameters

- filename : str

- plot : bool

- use_legend : bool

Examples

>>> scheduler.run()
>>> scheduler.join_jobs()
>>> scheduler.get_training_curves(plot=True)

join_jobs(timeout=None)

Wait for all scheduled jobs to finish.

load_state_dict(state_dict)

Load from the saved state dict.

Examples

>>> scheduler.load_state_dict(ag.load('checkpoint.ag'))

on_task_add(task, **kwargs)

Called when a new task is added. Registers the new task and informs the searcher (pending evaluation) and train_fn.

- Parameters

- task

- kwargs

on_task_report(task, result)

Called by the reporter thread once a new result is reported.

- Parameters

- task

- result

- Returns

Whether the reporter should move on. If not, it terminates.

run_job(task)

Run a training task on the scheduler (synchronously).

run_with_config(config)

Run with config for final fit. This launches a single training trial with fixed hyperparameter values. For example, after HPO has identified the best hyperparameter values based on a hold-out dataset, one can use this function to retrain a model with the same hyperparameters on all the available labeled data (including the hold-out set). It can also return other objects or states.

save(checkpoint=None)

Save a checkpoint.

schedule_next()

Schedule the next task suggested by the searcher.

shutdown()

shutdown() is now deprecated in favor of autogluon.done().

state_dict(destination=None)

Returns a dictionary containing the whole state of the scheduler.

Examples

>>> ag.save(scheduler.state_dict(), 'checkpoint.ag')

classmethod upload_files(files, **kwargs)

Upload files to remote machines, so that they are accessible by import or load.