autogluon.core.searcher

Example

Define a dummy training function with searchable spaces:

>>> import numpy as np
>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True),
...     wd=ag.space.Real(1e-3, 1e-2))
... def train_fn(args, reporter):
...     print('lr: {}, wd: {}'.format(args.lr, args.wd))
...     for e in range(10):
...         dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e))
...         reporter(epoch=e+1, accuracy=dummy_accuracy, lr=args.lr, wd=args.wd)

Note that the epoch value passed to reporter must be the number of epochs done so far, starting with 1. Recall that a searcher is used by a scheduler in order to suggest configurations to evaluate. Create a searcher and sample one configuration:

>>> searcher = ag.searcher.GPFIFOSearcher(train_fn.cs)
>>> searcher.get_config()
{'lr': 0.0031622777, 'wd': 0.0055}

Create a scheduler and run this toy experiment:

>>> scheduler = ag.scheduler.FIFOScheduler(train_fn,
...                                        searcher=searcher,
...                                        resource={'num_cpus': 2, 'num_gpus': 0},
...                                        num_trials=10,
...                                        reward_attr='accuracy',
...                                        time_attr='epoch')
>>> scheduler.run()

Note that reward_attr and time_attr must correspond to the keys used in the reporter callback in train_fn.
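To make this contract concrete, the sketch below uses a hypothetical stand-in for the reporter (`RecordingReporter` is not part of AutoGluon) that records each call and checks the two rules stated above: epochs are counted from 1, and the keys named by reward_attr and time_attr appear in every report. The training loop reproduces the dummy accuracy curve of train_fn with the standard library instead of NumPy.

```python
import random


class RecordingReporter:
    """Hypothetical stand-in for the reporter that AutoGluon passes to train_fn.

    It only records reports and checks the conventions described above;
    it is not connected to any scheduler.
    """

    def __init__(self, reward_attr="accuracy", time_attr="epoch"):
        self.reward_attr = reward_attr
        self.time_attr = time_attr
        self.reports = []

    def __call__(self, **kwargs):
        # reward_attr and time_attr must both be keys of every report
        for key in (self.reward_attr, self.time_attr):
            if key not in kwargs:
                raise KeyError(f"report is missing key {key!r}")
        # time_attr is the number of epochs done so far: 1, 2, 3, ...
        expected = len(self.reports) + 1
        if kwargs[self.time_attr] != expected:
            raise ValueError(f"expected {self.time_attr}={expected}, "
                             f"got {kwargs[self.time_attr]}")
        self.reports.append(dict(kwargs))


def dummy_train(lr, wd, reporter, num_epochs=10, seed=0):
    """Same dummy accuracy curve as train_fn above, without the ag.args decorator."""
    rng = random.Random(seed)
    for e in range(num_epochs):
        dummy_accuracy = 1 - 1.8 ** (-rng.uniform(e, 2 * e))
        reporter(epoch=e + 1, accuracy=dummy_accuracy, lr=lr, wd=wd)


reporter = RecordingReporter(reward_attr="accuracy", time_attr="epoch")
dummy_train(lr=0.003, wd=0.005, reporter=reporter)
print(len(reporter.reports), reporter.reports[-1]["epoch"])
```

A report with epoch=0, or one that omits the accuracy key, is rejected, which mirrors why reward_attr='accuracy' and time_attr='epoch' have to match the reporter call in train_fn.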

When working with FIFOScheduler or HyperbandScheduler, it is strongly recommended to let the scheduler create its searcher, by passing a string identifier as searcher (together with search_options, if needed), instead of creating the searcher object by hand:

>>> scheduler = ag.scheduler.FIFOScheduler(train_fn,
...                                        searcher='bayesopt',
...                                        resource={'num_cpus': 2, 'num_gpus': 0},
...                                        num_trials=10,
...                                        reward_attr='accuracy',
...                                        time_attr='epoch')

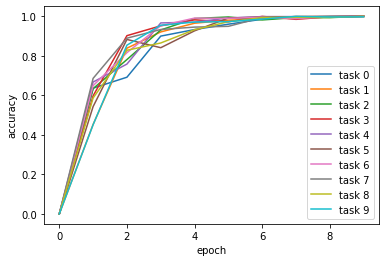

Visualize the results:

>>> scheduler.get_training_curves(plot=True)

Searchers

- RandomSearcher: Searcher which randomly samples configurations to try next.
- SKoptSearcher: SKopt Searcher that uses Bayesian optimization to suggest new hyperparameter configurations.
- GPFIFOSearcher: Gaussian process Bayesian optimization for FIFO scheduler
- GPMultiFidelitySearcher: Gaussian process Bayesian optimization for Hyperband scheduler
- GridSearcher: Grid Searcher that exhaustively tries all possible configurations.
- RLSearcher: Reinforcement Learning Searcher for ConfigSpace

RandomSearcher

class autogluon.core.searcher.RandomSearcher(configspace, **kwargs)

Searcher which randomly samples configurations to try next.

- Parameters

- configspace: ConfigSpace.ConfigurationSpace

The configuration space to sample from. It contains the full specification of the set of hyperparameter values (with optional prior distributions over these values).

Examples

By default, the searcher is created along with the scheduler. For example:

>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True))
... def train_fn(args, reporter):
...     reporter(accuracy=args.lr ** 2)
>>> scheduler = ag.scheduler.FIFOScheduler(
...     train_fn, searcher='random', num_trials=10,
...     reward_attr='accuracy')

This would result in a RandomSearcher with cs = train_fn.cs. You can also create a RandomSearcher by hand:

>>> import ConfigSpace as CS
>>> import ConfigSpace.hyperparameters as CSH
>>> import autogluon.core as ag
>>> # create configuration space
>>> cs = CS.ConfigurationSpace()
>>> lr = CSH.UniformFloatHyperparameter('lr', lower=1e-4, upper=1e-1, log=True)
>>> cs.add_hyperparameter(lr)
>>> # create searcher
>>> searcher = ag.searcher.RandomSearcher(cs)
>>> searcher.get_config()
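For readers who want to see what a random searcher does without installing AutoGluon or ConfigSpace, here is a plain-Python sketch. The class `ToyRandomSearcher` and its dict-based space format are hypothetical; they only mirror the documented method names (get_config, update, get_best_config, get_best_reward) and assume, as this document does, that higher reward is better. Log-scaled ranges are sampled uniformly in log space, which is the usual meaning of log=True.

```python
import math
import random


class ToyRandomSearcher:
    """Hypothetical random searcher over a dict {name: (lower, upper, log)}."""

    def __init__(self, space, reward_attribute="accuracy", seed=None):
        self.space = space
        self.reward_attribute = reward_attribute
        self.rng = random.Random(seed)
        self._results = {}  # config key -> best reward seen so far

    @staticmethod
    def _key(config):
        return tuple(sorted(config.items()))

    def get_config(self):
        config = {}
        for name, (lower, upper, log) in self.space.items():
            if log:
                # Uniform in log space, then mapped back
                value = math.exp(self.rng.uniform(math.log(lower), math.log(upper)))
            else:
                value = self.rng.uniform(lower, upper)
            config[name] = value
        return config

    def update(self, config, **kwargs):
        reward = kwargs[self.reward_attribute]
        key = self._key(config)
        # Keep the largest reward reported for this config
        self._results[key] = max(reward, self._results.get(key, -math.inf))

    def get_best_config(self):
        if not self._results:
            return {}
        return dict(max(self._results, key=self._results.get))

    def get_best_reward(self):
        return max(self._results.values(), default=-math.inf)


searcher = ToyRandomSearcher({"lr": (1e-4, 1e-1, True)}, seed=42)
for _ in range(20):
    config = searcher.get_config()
    searcher.update(config, accuracy=config["lr"] ** 2)  # same toy reward as above
print(searcher.get_best_config(), searcher.get_best_reward())
```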

- Attributes

debug_log: Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter.

Methods

- clone_from_state(state): Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.
- configure_scheduler(scheduler): Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves.
- cumulative_profile_record(): If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.
- dataset_size(): Size of dataset a model is fitted to, or 0 if no model is fitted to data.
- evaluation_failed(config, **kwargs): Called by scheduler if an evaluation job for config failed.
- get_best_config(): Returns the best configuration found so far.
- get_best_config_reward(): Returns the best configuration found so far, as well as the reward associated with this best config.
- get_best_reward(): Calculates the reward (i.e., validation performance) produced by training under the best configuration identified so far.
- get_config(**kwargs): Sample a new configuration at random.
- get_reward(config): Calculates the reward (i.e., validation performance) produced by training with the given configuration.
- get_state(): Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.
- model_parameters(): Dictionary with current model (hyper)parameter values if this is supported; otherwise empty.
- register_pending(config[, milestone]): Signals to searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending.
- remove_case(config, **kwargs): Remove data case previously appended by update.
- update(config, **kwargs): Update the searcher with the newest metric report.

clone_from_state(state)

Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

Given state as returned by get_state, this method combines the non-pickle-able part of the immutable state from self with state and returns the corresponding searcher clone. Afterwards, self is not used anymore.

If the searcher object itself is already pickle-able, then state is already the new searcher object, and the default implementation simply returns it; in this default, self is ignored.

- Parameters

state – See above

- Returns

New searcher object
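The get_state / clone_from_state split can be illustrated with a hypothetical searcher whose only non-pickle-able member is a lock (standing in for whatever a real searcher cannot pickle) and whose mutable state is a results dict. This is a sketch of the contract described above, not AutoGluon's implementation.

```python
import pickle
import threading


class ToyStatefulSearcher:
    """Hypothetical searcher illustrating get_state / clone_from_state."""

    def __init__(self, reward_attribute="accuracy"):
        self.reward_attribute = reward_attribute  # immutable configuration
        self._lock = threading.Lock()             # not pickle-able
        self._results = {}                        # mutable, pickle-able state

    def update(self, config, **kwargs):
        with self._lock:
            key = tuple(sorted(config.items()))
            self._results[key] = kwargs[self.reward_attribute]

    def get_state(self):
        # Only the pickle-able mutable state is returned
        return {"results": dict(self._results)}

    def clone_from_state(self, state):
        # Combine the immutable parts of self with the stored mutable state
        clone = ToyStatefulSearcher(reward_attribute=self.reward_attribute)
        clone._results = dict(state["results"])
        return clone


searcher = ToyStatefulSearcher()
searcher.update({"lr": 0.01}, accuracy=0.7)
blob = pickle.dumps(searcher.get_state())  # pickling the searcher itself would fail
restored = searcher.clone_from_state(pickle.loads(blob))
print(restored._results)
```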

configure_scheduler(scheduler)

Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves. This method has to be called before the searcher can be used.

The implementation here sets _reward_attribute for schedulers which specify it.

- Args:

- scheduler: TaskScheduler

Scheduler the searcher is used with.
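A minimal sketch of this behavior, assuming a hypothetical scheduler object that exposes a reward_attr attribute (no real AutoGluon scheduler is used). The searcher copies the attribute only if the scheduler specifies one, and refuses updates before it knows which key holds the reward.

```python
class ToyScheduler:
    """Hypothetical scheduler exposing the name of the reward attribute."""

    def __init__(self, reward_attr):
        self.reward_attr = reward_attr


class ToySearcher:
    """Hypothetical searcher that reads its reward key from the scheduler."""

    def __init__(self, reward_attribute=None):
        self._reward_attribute = reward_attribute

    def configure_scheduler(self, scheduler):
        # Take the reward attribute from the scheduler if it specifies one
        reward_attr = getattr(scheduler, "reward_attr", None)
        if reward_attr is not None:
            self._reward_attribute = reward_attr

    def update(self, config, **kwargs):
        if self._reward_attribute is None:
            raise RuntimeError("call configure_scheduler() before update()")
        return kwargs[self._reward_attribute]


searcher = ToySearcher()
searcher.configure_scheduler(ToyScheduler(reward_attr="accuracy"))
print(searcher.update({"lr": 0.01}, accuracy=0.9, epoch=1))
```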

cumulative_profile_record()

If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

dataset_size()

- Returns

Size of dataset a model is fitted to, or 0 if no model is fitted to data

property debug_log

Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter. See RandomSearcher for an example.

- Returns

DebugLogPrinter; or None (not supported)

evaluation_failed(config, **kwargs)

Called by scheduler if an evaluation job for config failed. The searcher should react appropriately (e.g., remove pending evaluations for this config, and blacklist config).

get_best_config()

Returns the best configuration found so far.

get_best_config_reward()

Returns the best configuration found so far, as well as the reward associated with this best config.

get_best_reward()

Calculates the reward (i.e., validation performance) produced by training under the best configuration identified so far. Assumes higher reward values indicate better performance.

get_config(**kwargs)

Sample a new configuration at random.

- Returns

A new configuration that is valid.

get_reward(config)

Calculates the reward (i.e., validation performance) produced by training with the given configuration.

get_state()

Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

The state returned here must be pickle-able. If the searcher object is pickle-able, the default is returning self.

- Returns

Pickle-able mutable state of searcher

model_parameters()

- Returns

Dictionary with current model (hyper)parameter values if this is supported; otherwise empty

register_pending(config, milestone=None)

Signals to searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending. For multi-fidelity schedulers, milestone is the next milestone the evaluation will reach, so that the model registers (config, milestone) as pending. In general, the searcher may assume that update is called with that config at a later time.
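The pending-evaluation bookkeeping described for register_pending, update and evaluation_failed can be sketched as follows. `PendingTracker` is hypothetical; it assumes configs can be keyed as sorted tuples and that every pending (config, milestone) entry is later matched by an update that reports the same milestone under the resource attribute (here 'epoch'), as the update documentation below describes for multi-fidelity schedulers.

```python
class PendingTracker:
    """Hypothetical bookkeeping of pending (config, milestone) pairs."""

    def __init__(self, resource_attribute="epoch"):
        self.resource_attribute = resource_attribute
        self.pending = set()    # {(config_key, milestone)}
        self.blacklist = set()  # configs whose evaluation failed

    @staticmethod
    def _key(config):
        return tuple(sorted(config.items()))

    def register_pending(self, config, milestone=None):
        self.pending.add((self._key(config), milestone))

    def update(self, config, **kwargs):
        # The report for this milestone arrived, so it is no longer pending
        milestone = kwargs.get(self.resource_attribute)
        self.pending.discard((self._key(config), milestone))

    def evaluation_failed(self, config, **kwargs):
        key = self._key(config)
        # Drop all pending entries for this config and never propose it again
        self.pending = {p for p in self.pending if p[0] != key}
        self.blacklist.add(key)


tracker = PendingTracker()
tracker.register_pending({"lr": 0.01}, milestone=1)
tracker.register_pending({"lr": 0.05}, milestone=1)
tracker.update({"lr": 0.01}, accuracy=0.6, epoch=1)
tracker.evaluation_failed({"lr": 0.05})
print(tracker.pending, tracker.blacklist)
```

A model-based searcher would treat the remaining pending entries as fantasy targets when scoring new candidates.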

remove_case(config, **kwargs)

Remove data case previously appended by update.

For searchers which maintain the dataset of all cases (reports) passed to update, this method allows removing one case from the dataset.

update(config, **kwargs)

Update the searcher with the newest metric report.

kwargs must include the reward (key == reward_attribute). For multi-fidelity schedulers (e.g., Hyperband), intermediate results are also reported. In this case, kwargs must also include the resource (key == resource_attribute). We can also assume that if register_pending(config, …) is received, then later on, the searcher receives update(config, …) with milestone as resource.

Note that for Hyperband scheduling, update is also called for intermediate results. _results is updated whenever the new reward value is larger than the previously recorded one. This implies that the best value for a config (in _results) could be obtained for an intermediate resource, not the final one (by virtue of early stopping). Full details can be reconstructed from training_history of the scheduler.
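The "keep the larger reward" rule can be replayed on a short sequence of reports. The function name and input format are hypothetical, and the accuracy numbers are made up so that the peak falls on an intermediate epoch.

```python
def best_rewards(reports, reward_attribute="accuracy"):
    """Hypothetical replay of update() calls, keeping the best reward per config.

    reports: list of (config_dict, metrics_dict) in the order they were received.
    """
    results = {}
    for config, metrics in reports:
        key = tuple(sorted(config.items()))
        reward = metrics[reward_attribute]
        # Overwrite only if the new reward is larger than the recorded one
        if key not in results or reward > results[key]:
            results[key] = reward
    return results


reports = [
    ({"lr": 0.01}, {"epoch": 1, "accuracy": 0.60}),
    ({"lr": 0.01}, {"epoch": 2, "accuracy": 0.72}),
    ({"lr": 0.01}, {"epoch": 3, "accuracy": 0.70}),  # made-up dip
]
print(best_rewards(reports))
```

The recorded value (0.72) comes from epoch 2, not from the last report; which epoch produced it is not stored here and would have to be looked up in the scheduler's training_history.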

SKoptSearcher

class autogluon.core.searcher.SKoptSearcher(configspace, **kwargs)

SKopt Searcher that uses Bayesian optimization to suggest new hyperparameter configurations.

Requires that the 'scikit-optimize' package is installed.

- Parameters

- configspace: ConfigSpace.ConfigurationSpace

The configuration space to sample from. It contains the full specification of the hyperparameters with their priors.

- kwargs: Optional arguments passed to the skopt.optimizer.Optimizer class.

See the documentation of skopt.optimizer.Optimizer. These kwargs can be used to specify which surrogate model Bayesian optimization should rely on, which acquisition function to use, how to optimize the acquisition function, etc. The skopt library provides comprehensive Bayesian optimization functionality; popular non-default kwargs options include:

base_estimator = 'GP' or 'RF' or 'ET' or 'GBRT' (to specify different surrogate models like Gaussian processes, random forests, etc.)

acq_func = 'LCB' or 'EI' or 'PI' or 'gp_hedge' (to specify different acquisition functions like lower confidence bound, expected improvement, etc.)

For example, we can tell our searcher to perform Bayesian optimization with a random forest surrogate model and use the expected improvement acquisition function by invoking: SKoptSearcher(cs, base_estimator='RF', acq_func='EI')

Examples

By default, the searcher is created along with the scheduler. For example:

>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True))
... def train_fn(args, reporter):
...     reporter(accuracy=args.lr ** 2)
>>> search_options = {'base_estimator': 'RF', 'acq_func': 'EI'}
>>> scheduler = ag.scheduler.FIFOScheduler(
...     train_fn, searcher='skopt', search_options=search_options,
...     num_trials=10, reward_attr='accuracy')

This would result in a SKoptSearcher with cs = train_fn.cs. You can also create a SKoptSearcher by hand:

>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True),
...     wd=ag.space.Real(1e-3, 1e-2))
... def train_fn(args, reporter):
...     pass
>>> searcher = ag.searcher.SKoptSearcher(train_fn.cs)
>>> searcher.get_config()
{'lr': 0.0031622777, 'wd': 0.0055}
>>> searcher = ag.searcher.SKoptSearcher(
...     train_fn.cs, reward_attribute='accuracy', base_estimator='RF',
...     acq_func='EI')
>>> next_config = searcher.get_config()
>>> searcher.update(next_config, accuracy=10.0)  # made-up value

Note

get_config() cannot ensure valid configurations for conditional spaces, since skopt does not contain this functionality (it is not integrated with ConfigSpace). If an invalid config is produced, SKoptSearcher.get_config() will catch the resulting exceptions and revert to random_config() instead.

get_config(max_tries) uses skopt's batch BayesOpt functionality to query at most max_tries configs to try out. If all of these configs have already been scheduled (which might happen in the asynchronous setting), then get_config simply reverts to random search via random_config().
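The fallback behavior in this note can be sketched without scikit-optimize. Here `propose`, `is_valid` and `random_config` are hypothetical callables standing in for skopt's Optimizer.ask(), a ConfigSpace validity check, and SKoptSearcher.random_config(); the function asks for up to max_tries proposals and falls back to a random config when a proposal is invalid or every proposal was already scheduled.

```python
import random


def get_config_with_fallback(propose, is_valid, random_config, scheduled, max_tries=100):
    """Hypothetical version of the fallback logic described above.

    propose():       returns a candidate config (stands in for skopt's ask()).
    is_valid(c):     False if c violates the (conditional) config space.
    random_config(): returns a config that is guaranteed to be valid.
    scheduled:       set of config keys that were already handed out.
    """
    for _ in range(int(max_tries)):
        candidate = propose()
        if not is_valid(candidate):
            # skopt knows nothing about conditional spaces: revert to random search
            break
        key = tuple(sorted(candidate.items()))
        if key not in scheduled:
            scheduled.add(key)
            return candidate
    # Proposal was invalid, or all proposals were already scheduled
    config = random_config()
    scheduled.add(tuple(sorted(config.items())))
    return config


rng = random.Random(0)
scheduled = set()


def stuck_proposer():
    # Always proposes the same point, as can happen in an asynchronous setting
    return {"lr": 0.01}


def always_valid(config):
    return True


def random_lr():
    return {"lr": rng.uniform(1e-3, 1e-2)}


first = get_config_with_fallback(stuck_proposer, always_valid, random_lr, scheduled)
second = get_config_with_fallback(stuck_proposer, always_valid, random_lr, scheduled)
print(first, second)
```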

- Attributes

debug_log: Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter.

Methods

- clone_from_state(state): Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.
- config2skopt(config): Converts autogluon config (dict object) to skopt format (list object).
- configure_scheduler(scheduler): Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves.
- cumulative_profile_record(): If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.
- dataset_size(): Size of dataset a model is fitted to, or 0 if no model is fitted to data.
- default_config(): Function to return the default configuration that should be tried first.
- evaluation_failed(config, **kwargs): Called by scheduler if an evaluation job for config failed.
- get_best_config(): Returns the best configuration found so far.
- get_best_config_reward(): Returns the best configuration found so far, as well as the reward associated with this best config.
- get_best_reward(): Calculates the reward (i.e., validation performance) produced by training under the best configuration identified so far.
- get_config(**kwargs): Function to sample a new configuration. This function is called to query a new configuration that has not yet been tried.
- get_reward(config): Calculates the reward (i.e., validation performance) produced by training with the given configuration.
- get_state(): Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.
- model_parameters(): Dictionary with current model (hyper)parameter values if this is supported; otherwise empty.
- random_config(): Function to randomly sample a new configuration (which is ensured to be valid in the case of conditional hyperparameter spaces).
- register_pending(config[, milestone]): Signals to searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending.
- remove_case(config, **kwargs): Remove data case previously appended by update.
- skopt2config(point): Converts skopt point (list object) to autogluon config format (dict object).
- update(config, **kwargs): Update the searcher with the newest metric report.

clone_from_state(state)

Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

Given state as returned by get_state, this method combines the non-pickle-able part of the immutable state from self with state and returns the corresponding searcher clone. Afterwards, self is not used anymore.

If the searcher object itself is already pickle-able, then state is already the new searcher object, and the default implementation simply returns it; in this default, self is ignored.

- Parameters

state – See above

- Returns

New searcher object

config2skopt(config)

Converts autogluon config (dict object) to skopt format (list object).

- Returns

Object of the same type as returned by skopt.Optimizer.ask()
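The two conversions amount to reading and writing values in a fixed hyperparameter order. The standalone functions below are hypothetical stand-ins for config2skopt and skopt2config, assuming (as a simplification) that the order is given as a list of names.

```python
def config2skopt(config, hp_ordering):
    """Dict config -> list point, in the fixed hyperparameter order."""
    return [config[name] for name in hp_ordering]


def skopt2config(point, hp_ordering):
    """List point -> dict config."""
    return dict(zip(hp_ordering, point))


hp_ordering = ["lr", "wd"]  # hypothetical fixed order of hyperparameter names
config = {"wd": 0.0055, "lr": 0.0031622777}
point = config2skopt(config, hp_ordering)
print(point, skopt2config(point, hp_ordering) == config)
```

Keeping one fixed ordering is what makes the round trip lossless, since a list point carries no names of its own.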

configure_scheduler(scheduler)

Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves. This method has to be called before the searcher can be used.

The implementation here sets _reward_attribute for schedulers which specify it.

- Args:

- scheduler: TaskScheduler

Scheduler the searcher is used with.

cumulative_profile_record()

If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

dataset_size()

- Returns

Size of dataset a model is fitted to, or 0 if no model is fitted to data

property debug_log

Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter. See RandomSearcher for an example.

- Returns

DebugLogPrinter; or None (not supported)

default_config()

Function to return the default configuration that should be tried first.

- Returns

config

evaluation_failed(config, **kwargs)

Called by scheduler if an evaluation job for config failed. The searcher should react appropriately (e.g., remove pending evaluations for this config, and blacklist config).

get_best_config()

Returns the best configuration found so far.

get_best_config_reward()

Returns the best configuration found so far, as well as the reward associated with this best config.

get_best_reward()

Calculates the reward (i.e., validation performance) produced by training under the best configuration identified so far. Assumes higher reward values indicate better performance.

get_config(**kwargs)

Function to sample a new configuration. This function is called to query a new configuration that has not yet been tried. It asks skopt for one point at a time, up to max_tries times. If skopt proposes an invalid hyperparameter configuration, it reverts to random search (since skopt configurations cannot handle conditional spaces like ConfigSpace can). TODO: may loop indefinitely due to no termination condition (like RandomSearcher.get_config()).

- Parameters

- max_tries: int, default = 1e2

The maximum number of tries to ask for a unique config from skopt before reverting to random search.

get_reward(config)

Calculates the reward (i.e., validation performance) produced by training with the given configuration.

get_state()

Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

The state returned here must be pickle-able. If the searcher object is pickle-able, the default is returning self.

- Returns

Pickle-able mutable state of searcher

model_parameters()

- Returns

Dictionary with current model (hyper)parameter values if this is supported; otherwise empty

random_config()

Function to randomly sample a new configuration (which is ensured to be valid in the case of conditional hyperparameter spaces).

register_pending(config, milestone=None)

Signals to searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending. For multi-fidelity schedulers, milestone is the next milestone the evaluation will reach, so that the model registers (config, milestone) as pending. In general, the searcher may assume that update is called with that config at a later time.

remove_case(config, **kwargs)

Remove data case previously appended by update.

For searchers which maintain the dataset of all cases (reports) passed to update, this method allows removing one case from the dataset.

GPFIFOSearcher

class autogluon.core.searcher.GPFIFOSearcher(configspace, **kwargs)

Gaussian process Bayesian optimization for FIFO scheduler

This searcher must be used with FIFOScheduler. It provides Bayesian optimization, based on a Gaussian process surrogate model. It is created along with the scheduler, using searcher='bayesopt' (see Examples below).

Pending configurations (for which evaluation tasks are currently running) are dealt with by fantasizing (i.e., target values are drawn from the current posterior, and acquisition functions are averaged over this sample, see num_fantasy_samples). The GP surrogate model uses a Matern 5/2 covariance function with automatic relevance determination (ARD) of input attributes, and a constant mean function. The acquisition function is expected improvement (EI). All hyperparameters of the surrogate model are estimated by empirical Bayes (maximizing the marginal likelihood). In general, this hyperparameter fitting is the most expensive part of a get_config call.
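To make the surrogate and acquisition function concrete, here is a plain-Python sketch of the textbook Matern 5/2 ARD covariance and of expected improvement for a minimized criterion, averaged over fantasy samples. It is not AutoGluon's implementation: the function names are hypothetical, and posterior means, standard deviations and incumbent values are supplied by hand instead of coming from a fitted GP.

```python
import math


def matern52_ard(x1, x2, lengthscales, variance=1.0):
    """Matern 5/2 kernel with one lengthscale per input dimension (ARD)."""
    r = math.sqrt(sum(((a - b) / l) ** 2 for a, b, l in zip(x1, x2, lengthscales)))
    s = math.sqrt(5.0) * r
    # variance * (1 + sqrt(5) r + 5/3 r^2) * exp(-sqrt(5) r)
    return variance * (1.0 + s + s * s / 3.0) * math.exp(-s)


def expected_improvement(mean, std, best):
    """EI of a minimized criterion: E[max(best - y, 0)] for y ~ N(mean, std^2)."""
    if std <= 0.0:
        return max(best - mean, 0.0)
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf


def fantasy_averaged_ei(means, stds, bests):
    """Average EI over fantasy samples.

    Each fantasy sample fills in the pending targets, which yields its own
    posterior (mean, std) and incumbent; here they are passed in as lists.
    """
    values = [expected_improvement(m, s, b) for m, s, b in zip(means, stds, bests)]
    return sum(values) / len(values)


print(matern52_ard([0.2, 0.5], [0.2, 0.5], [0.3, 1.0]))
print(expected_improvement(mean=0.0, std=1.0, best=0.0))
print(fantasy_averaged_ei([0.0, 0.1], [1.0, 1.0], [0.0, -0.2]))
```

Because the criterion is minimized (see map_reward), improvement here means falling below the best criterion value observed so far.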

The following happens in get_config. For the first num_init_random calls, a config is drawn at random (the very first call results in the default config of the space). Afterwards, Bayesian optimization is used, unless there are no finished evaluations yet. First, the model hyperparameters are refit; this step can be skipped (see the opt_skip* parameters). Next, num_init_candidates configs are sampled at random and ranked by a scoring function (initial_scoring). BFGS local optimization is then run, starting from the top-scoring config, to minimize the negative EI (equivalently, to maximize EI).
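The branching in this paragraph can be summarized by a small hypothetical helper. It assumes first_is_default=True by default and that call_index counts get_config calls from 0; it only reports which strategy would be used, not the config itself.

```python
def get_config_strategy(call_index, num_init_random, num_finished, first_is_default=True):
    """Which strategy a GP-based get_config call uses (hypothetical sketch)."""
    if call_index == 0 and first_is_default:
        return "default"  # very first call: default config of the space
    if call_index < num_init_random:
        return "random"   # initial random phase
    if num_finished == 0:
        return "random"   # no finished evaluations to fit the GP to yet
    # Otherwise: refit GP hyperparameters (unless skipped), rank num_init_candidates
    # random configs with initial_scoring, then locally optimize EI from the best one
    return "bayesopt"


print([get_config_strategy(i, num_init_random=3, num_finished=max(i - 1, 0))
       for i in range(5)])
```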

- Parameters

- configspace: ConfigSpace.ConfigurationSpace

Config space of train_fn, equal to train_fn.cs

- reward_attribute: str

Name of reward attribute reported by train_fn, equal to reward_attr of scheduler

- debug_log: bool (default: False)

If True, both searcher and scheduler output an informative log, from which the configs chosen and decisions being made can be traced.

- first_is_default: bool (default: True)

If True, the first config to be evaluated is the default one of the config space. Otherwise, this first config is drawn at random.

- elapsed_time_attribute: str (optional)

Name of elapsed time attribute in data obtained from reporter. Here, elapsed time is measured in seconds since the start of train_fn.

- random_seed: int

Seed for pseudo-random number generator used.

- num_init_random: int

Number of initial get_config calls for which randomly sampled configs are returned. Afterwards, Bayesian optimization is used.

- num_init_candidates: int

Number of initial candidates sampled at random in order to seed the search for get_config.

- num_fantasy_samples: int

Number of samples drawn for fantasizing (latent target values for pending candidates).

- initial_scoring: str

Scoring function to rank initial candidates (local optimization of EI is started from the top scorer). Values are 'thompson_indep' (independent Thompson sampling; randomized score, which can increase exploration) and 'acq_func' (score is the same (EI) acquisition function which is afterwards locally optimized).

- opt_nstarts: int

Parameter for hyperparameter fitting. Number of random restarts.

- opt_maxiter: int

Parameter for hyperparameter fitting. Maximum number of iterations per restart.

- opt_warmstart: bool

Parameter for hyperparameter fitting. If True, each fitting is started from the previous optimum. Not recommended in general.

- opt_verbose: bool

Parameter for hyperparameter fitting. If True, produces verbose output.

- opt_skip_init_length: int

Parameter for hyperparameter fitting, skip predicate. Fitting is never skipped as long as the number of observations is below this threshold.

- opt_skip_period: int

Parameter for hyperparameter fitting, skip predicate. If K = opt_skip_period > 1 and the number of observations is above opt_skip_init_length, fitting is done only on every K-th call, and skipped otherwise.

- map_reward: str or MapReward (default: '1_minus_x')

AutoGluon maximizes reward, while internally, Bayesian optimization minimizes the criterion. This parameter states how reward is mapped to criterion, which must be a strictly decreasing function. Values are '1_minus_x' (criterion = 1 - reward) and 'minus_x' (criterion = -reward). From a technical standpoint, it does not matter which is chosen here, because criterion is only used internally. Also note that criterion data is always normalized to mean 0, variance 1 before being fitted with a GP.
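Two of the parameters above can be illustrated in a few lines. The skip predicate follows the descriptions of opt_skip_init_length and opt_skip_period, but which calls count as "every K-th" is an assumption of this sketch (it refits when call_index is a multiple of K). The reward mappings implement the two documented map_reward values, followed by normalization to mean 0 and (population) variance 1; all function names are hypothetical.

```python
import statistics


def should_refit(num_observations, call_index, opt_skip_init_length, opt_skip_period):
    """Hypothetical skip predicate for GP hyperparameter fitting."""
    if num_observations < opt_skip_init_length or opt_skip_period <= 1:
        return True  # never skip below the threshold, or if skipping is disabled
    # Above the threshold: fit only on every K-th call (assumed counting scheme)
    return call_index % opt_skip_period == 0


MAP_REWARD = {
    "1_minus_x": lambda reward: 1.0 - reward,  # criterion = 1 - reward
    "minus_x": lambda reward: -reward,         # criterion = -reward
}


def normalized_criteria(rewards, map_reward="1_minus_x"):
    """Map rewards to criterion values, then normalize to mean 0, variance 1.

    Assumes the rewards are not all equal (otherwise the std is zero).
    """
    criteria = [MAP_REWARD[map_reward](r) for r in rewards]
    mean = statistics.mean(criteria)
    std = statistics.pstdev(criteria)
    return [(c - mean) / std for c in criteria]


rewards = [0.60, 0.72, 0.70]
print(normalized_criteria(rewards, "1_minus_x"))
print(normalized_criteria(rewards, "minus_x"))
print([should_refit(n, n, opt_skip_init_length=5, opt_skip_period=2) for n in range(3, 9)])
```

The two mappings differ only by a constant, so after normalization they give the same values, which is consistent with the remark that the choice does not matter technically.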

Examples

>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True))
... def train_fn(args, reporter):
...     reporter(accuracy=args.lr ** 2)
>>> search_options = {
...     'map_reward': 'minus_x',
...     'opt_skip_period': 2}
>>> scheduler = ag.scheduler.FIFOScheduler(
...     train_fn, searcher='bayesopt', search_options=search_options,
...     num_trials=10, reward_attr='accuracy')

- Attributes

debug_log: Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter.

Methods

- clone_from_state(state): Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.
- configure_scheduler(scheduler): Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves.
- cumulative_profile_record(): If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.
- dataset_size(): Size of dataset a model is fitted to, or 0 if no model is fitted to data.
- evaluation_failed(config, **kwargs): Called by scheduler if an evaluation job for config failed.
- get_best_config(): Returns the best configuration found so far.
- get_best_config_reward(): Returns the best configuration found so far, as well as the reward associated with this best config.
- get_best_reward(): Calculates the reward (i.e., validation performance) produced by training under the best configuration identified so far.
- get_config(**kwargs): Function to sample a new configuration.
- get_reward(config): Calculates the reward (i.e., validation performance) produced by training with the given configuration.
- get_state(): Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.
- model_parameters(): Dictionary with current model (hyper)parameter values if this is supported; otherwise empty.
- register_pending(config[, milestone]): Signals to searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending.
- remove_case(config, **kwargs): Remove data case previously appended by update.
- update(config, **kwargs): Update the searcher with the newest metric report.
- set_getconfig_callback
- set_profiler

clone_from_state(state)

Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

Given state as returned by get_state, this method combines the non-pickle-able part of the immutable state from self with state and returns the corresponding searcher clone. Afterwards, self is not used anymore.

If the searcher object itself is already pickle-able, then state is already the new searcher object, and the default implementation simply returns it; in this default, self is ignored.

- Parameters

state – See above

- Returns

New searcher object

configure_scheduler(scheduler)

Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves. This method has to be called before the searcher can be used.

The implementation here sets _reward_attribute for schedulers which specify it.

- Args:

- scheduler: TaskScheduler

Scheduler the searcher is used with.

cumulative_profile_record()

If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

dataset_size()

- Returns

Size of dataset a model is fitted to, or 0 if no model is fitted to data

property debug_log

Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter. See RandomSearcher for an example.

- Returns

DebugLogPrinter; or None (not supported)

evaluation_failed(config, **kwargs)

Called by scheduler if an evaluation job for config failed. The searcher should react appropriately (e.g., remove pending evaluations for this config, and blacklist config).

get_best_config()

Returns the best configuration found so far.

get_best_config_reward()

Returns the best configuration found so far, as well as the reward associated with this best config.

get_best_reward()

Calculates the reward (i.e., validation performance) produced by training under the best configuration identified so far. Assumes higher reward values indicate better performance.

get_config(**kwargs)

Function to sample a new configuration. This function is called inside TaskScheduler to query a new configuration.

- Args:

kwargs: Extra information may be passed from scheduler to searcher.

- Returns

(config, info_dict): must return a valid configuration and a (possibly empty) info dict.

get_reward(config)

Calculates the reward (i.e., validation performance) produced by training with the given configuration.

get_state()

Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

The state returned here must be pickle-able. If the searcher object is pickle-able, the default is returning self.

- Returns

Pickle-able mutable state of searcher

model_parameters()

- Returns

Dictionary with current model (hyper)parameter values if this is supported; otherwise empty

register_pending(config, milestone=None)

Signals to searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending. For multi-fidelity schedulers, milestone is the next milestone the evaluation will reach, so that the model registers (config, milestone) as pending. In general, the searcher may assume that update is called with that config at a later time.

remove_case(config, **kwargs)

Remove data case previously appended by update.

For searchers which maintain the dataset of all cases (reports) passed to update, this method allows removing one case from the dataset.

update(config, **kwargs)

Update the searcher with the newest metric report.

kwargs must include the reward (key == reward_attribute). For multi-fidelity schedulers (e.g., Hyperband), intermediate results are also reported. In this case, kwargs must also include the resource (key == resource_attribute). We can also assume that if register_pending(config, …) is received, then later on, the searcher receives update(config, …) with milestone as resource.

Note that for Hyperband scheduling, update is also called for intermediate results. _results is updated whenever the new reward value is larger than the previously recorded one. This implies that the best value for a config (in _results) could be obtained for an intermediate resource, not the final one (by virtue of early stopping). Full details can be reconstructed from training_history of the scheduler.

GPMultiFidelitySearcher

class autogluon.core.searcher.GPMultiFidelitySearcher(configspace, **kwargs)

Gaussian process Bayesian optimization for Hyperband scheduler

This searcher must be used with HyperbandScheduler. It provides a novel combination of Bayesian optimization, based on a Gaussian process surrogate model, with Hyperband scheduling. In particular, observations across resource levels are modelled jointly. It is created along with the scheduler, using searcher='bayesopt' (see Examples below).

Most of the comments on GPFIFOSearcher apply here as well. In multi-fidelity HPO, we optimize a function f(x, r), where x is the configuration and r the resource (or time) attribute. The latter must be a positive integer. In most applications, time_attr == 'epoch', and the resource is the number of epochs already trained.

We model the function f(x, r) jointly over all resource levels r at which it is observed (but see searcher_data in HyperbandScheduler). The kernel and mean function of our surrogate model are over (x, r). The surrogate model is selected by gp_resource_kernel. More details about the supported kernels are given in:

Tiao, Klein, Lienart, Archambeau, Seeger (2020) Model-based Asynchronous Hyperparameter and Neural Architecture Search https://arxiv.org/abs/2003.10865

The acquisition function (EI), which is optimized in get_config, is obtained by fixing the resource level r to a value determined by the current state. If resource_acq == 'bohb', r is the largest value <= max_t at which we have seen >= dimension(x) metric values. If resource_acq == 'first', r is the first milestone that config x would reach when started.
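The two resource_acq rules can be sketched as a small hypothetical function. Observation counts per resource level and the list of milestones are passed in directly; the text does not say what 'bohb' does when no level has enough observations, so this sketch simply returns None in that case.

```python
def acquisition_resource(resource_acq, num_obs_per_resource, dim_x, max_t, milestones):
    """Resource level r at which EI is evaluated (hypothetical sketch).

    num_obs_per_resource: dict {r: number of metric values observed at r}
    milestones: sorted rung levels a newly started config would pass through
    """
    if resource_acq == "first":
        # First milestone a config would reach when started
        return milestones[0]
    if resource_acq == "bohb":
        # Largest r <= max_t with at least dim_x observed metric values
        eligible = [r for r, n in num_obs_per_resource.items()
                    if r <= max_t and n >= dim_x]
        return max(eligible) if eligible else None
    raise ValueError(f"unknown resource_acq: {resource_acq!r}")


counts = {1: 12, 3: 4, 9: 1}  # made-up observation counts
print(acquisition_resource("bohb", counts, dim_x=2, max_t=10, milestones=[1, 3, 9]))
print(acquisition_resource("first", counts, dim_x=2, max_t=10, milestones=[1, 3, 9]))
```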

- Parameters

- configspaceConfigSpace.ConfigurationSpace

Config space of train_fn, equal to train_fn.cs

- reward_attributestr

Name of reward attribute reported by train_fn, equal to reward_attr of scheduler

- resource_attributestr

Name of resource (or time) attribute reported by train_fn, equal to time_attr of scheduler

- debug_logbool (default: False)

If True, both searcher and scheduler output an informative log, from which the configs chosen and decisions being made can be traced.

- first_is_defaultbool (default: True)

If True, the first config to be evaluated is the default one of the config space. Otherwise, this first config is drawn at random.

- elapsed_time_attributestr (optional)

Name of elapsed time attribute in data obtained from reporter. Here, elapsed time counts since the start of train_fn, unit is seconds.

- random_seed : int

Seed for the pseudo-random number generator.

- num_init_random : int

See GPFIFOSearcher

- num_init_candidates : int

See GPFIFOSearcher

- num_fantasy_samples : int

See GPFIFOSearcher

- initial_scoring : str

See GPFIFOSearcher

- opt_nstarts : int

See GPFIFOSearcher

- opt_maxiter : int

See GPFIFOSearcher

- opt_warmstart : bool

See GPFIFOSearcher

- opt_verbose : bool

See GPFIFOSearcher

- opt_skip_init_length : int

See GPFIFOSearcher

- opt_skip_period : int

See GPFIFOSearcher

- map_reward : str or MapReward (default: ‘1_minus_x’)

See GPFIFOSearcher

- gp_resource_kernel : str

Surrogate model over the criterion function f(x, r), where x is the config and r the resource. Note that x is encoded as a vector with entries in [0, 1] and r is linearly mapped to [0, 1], while the criterion data is normalized to mean 0 and variance 1. The reference above provides details on the supported models. For the exponential decay kernels, the base kernel over x is Matern 5/2 ARD. Values are: ‘matern52’ (Matern 5/2 ARD kernel over [x, r]); ‘matern52-res-warp’ (Matern 5/2 ARD kernel over [x, r], with additional warping on r); ‘exp-decay-sum’ (exponential decay kernel with delta=0, the additive kernel from Freeze-Thaw Bayesian Optimization); ‘exp-decay-delta1’ (exponential decay kernel with delta=1); ‘exp-decay-combined’ (exponential decay kernel, with delta in [0, 1] a hyperparameter).

- resource_acq : str

Determines how the EI acquisition function is used (see above). Values: ‘bohb’, ‘first’

- opt_skip_num_max_resource : bool

Parameter for hyperparameter fitting (skip predicate). If True and the number of observations exceeds opt_skip_init_length, fitting is done only when there is a new datapoint at r = max_t, and is skipped otherwise.
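The data preparation described under gp_resource_kernel and map_reward, and the opt_skip_num_max_resource predicate, can be sketched as follows. This is a minimal illustration, assuming the default ‘1_minus_x’ reward map, a population (not sample) variance for the normalization, and ignoring opt_skip_period; all function names are hypothetical and do not correspond to AutoGluon internals.

```python
from typing import List


def encode_resource(r: int, r_min: int, r_max: int) -> float:
    """Linearly map a resource level r in [r_min, r_max] to [0, 1]."""
    return (r - r_min) / (r_max - r_min)


def rewards_to_targets(rewards: List[float]) -> List[float]:
    """Apply the '1_minus_x' map (criterion = 1 - reward, to be minimized),
    then normalize the criterion values to mean 0 and variance 1."""
    crit = [1.0 - reward for reward in rewards]
    mean = sum(crit) / len(crit)
    var = sum((c - mean) ** 2 for c in crit) / len(crit)  # population variance
    std = var ** 0.5 if var > 0 else 1.0  # avoid division by zero for constant data
    return [(c - mean) / std for c in crit]


def should_refit(
    num_obs: int,
    opt_skip_init_length: int,
    new_resource_levels: List[int],
    max_t: int,
    skip_num_max_resource: bool,
) -> bool:
    """Hypothetical skip predicate for GP hyperparameter fitting (opt_skip_period ignored)."""
    if not skip_num_max_resource or num_obs <= opt_skip_init_length:
        return True
    # Past the initial phase: refit only if a new datapoint arrived at r = max_t.
    return max_t in new_resource_levels


print(encode_resource(5, r_min=1, r_max=9))  # 0.5
print(rewards_to_targets([0.2, 0.5, 0.8]))
print(should_refit(20, 10, [3], max_t=9, skip_num_max_resource=True))  # False
```

Skipping refits until new data arrives at max_t trades some model freshness for lower overhead, since intermediate reports are typically far more frequent than full-resource ones.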


Examples

>>> import numpy as np
>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True),
...     wd=ag.space.Real(1e-3, 1e-2))
... def train_fn(args, reporter):
...     print('lr: {}, wd: {}'.format(args.lr, args.wd))
...     for e in range(10):
...         dummy_accuracy = 1 - np.power(1.8, -np.random.uniform(e, 2*e))
...         reporter(epoch=e+1, accuracy=dummy_accuracy, lr=args.lr,
...                  wd=args.wd)
>>> search_options = {
...     'gp_resource_kernel': 'matern52-res-warp',
...     'opt_skip_num_max_resource': True}
>>> scheduler = ag.scheduler.HyperbandScheduler(
...     train_fn, searcher='bayesopt', search_options=search_options,
...     num_trials=10, reward_attr='accuracy', time_attr='epoch',
...     max_t=10, grace_period=1, reduction_factor=3)

- Attributes

debug_log: Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter.

Methods

- clone_from_state(state): Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

- configure_scheduler(scheduler): Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves.

- cumulative_profile_record(): If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

- dataset_size(): Returns the size of the dataset a model is fitted to, or 0 if no model is fitted to data.

- evaluation_failed(config, **kwargs): Called by the scheduler if an evaluation job for config failed.

- get_best_config(): Returns the best configuration found so far.

- get_best_config_reward(): Returns the best configuration found so far, as well as the reward associated with this best config.

- get_best_reward(): Calculates the reward (i.e. validation performance) produced by training under the best configuration identified so far.

- get_config(**kwargs): Function to sample a new configuration.

- get_reward(config): Calculates the reward (i.e. validation performance) produced by training with the given configuration.

- get_state(): Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

- model_parameters(): Returns a dictionary with the current model (hyper)parameter values if this is supported; otherwise an empty one.

- register_pending(config[, milestone]): Signals to the searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending.

- remove_case(config, **kwargs): Removes a data case previously appended by update.

- update(config, **kwargs): Updates the searcher with the newest metric report.

- set_getconfig_callback

- set_profiler

clone_from_state(state)

Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

Given state as returned by get_state, this method combines the non-pickle-able part of the immutable state from self with state and returns the corresponding searcher clone. Afterwards, self is not used anymore.

If the searcher object as such is already pickle-able, then state is already the new searcher object, and the default is just returning it. In this default, self is ignored.

- Parameters

state – See above

- Returns

New searcher object

configure_scheduler(scheduler)

Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves. This method has to be called before the searcher can be used.

The implementation here sets _reward_attribute for schedulers which specify it.

- Args:

- scheduler: TaskScheduler

Scheduler the searcher is used with.

cumulative_profile_record()

If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

dataset_size()

- Returns

Size of dataset a model is fitted to, or 0 if no model is fitted to data

property debug_log

Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter. See RandomSearcher for an example.

- Returns

DebugLogPrinter; or None (not supported)

evaluation_failed(config, **kwargs)

Called by the scheduler if an evaluation job for config failed. The searcher should react appropriately (e.g., remove pending evaluations for this config, and blacklist config).

get_best_config()

Returns the best configuration found so far.

get_best_config_reward()

Returns the best configuration found so far, as well as the reward associated with this best config.

get_best_reward()

Calculates the reward (i.e. validation performance) produced by training under the best configuration identified so far. Assumes higher reward values indicate better performance.

get_config(**kwargs)

Function to sample a new configuration. This function is called inside TaskScheduler to query a new configuration.

- Args:

- kwargs: Extra information may be passed from scheduler to searcher

- Returns: (config, info_dict)

Must return a valid configuration and a (possibly empty) info dict.

get_reward(config)

Calculates the reward (i.e. validation performance) produced by training with the given configuration.

get_state()

Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

The state returned here must be pickle-able. If the searcher object is pickle-able, the default is returning self.

- Returns

Pickle-able mutable state of searcher
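The get_state / clone_from_state contract can be illustrated with a toy class. ToySearcher is hypothetical: it pickles only its mutable state, while the immutable part (the config space) is taken from self and the non-pickle-able part (here, a lock) is recreated in the clone.

```python
import pickle
import threading
from typing import Any, Dict, List, Tuple


class ToySearcher:
    """Hypothetical searcher used only to illustrate get_state / clone_from_state."""

    def __init__(self, configspace: Dict[str, List[Any]]) -> None:
        self.configspace = configspace          # immutable part
        self._lock = threading.Lock()           # not pickle-able
        self._results: Dict[Tuple, float] = {}  # mutable state

    def update(self, config: Dict[str, Any], reward: float) -> None:
        with self._lock:
            self._results[tuple(sorted(config.items()))] = reward

    def get_state(self) -> Dict[str, Any]:
        # Return only the mutable state, which must be pickle-able.
        return {"results": dict(self._results)}

    def clone_from_state(self, state: Dict[str, Any]) -> "ToySearcher":
        # Combine the immutable part of self with the restored mutable state.
        clone = ToySearcher(self.configspace)
        clone._results = dict(state["results"])
        return clone


searcher = ToySearcher({"lr": [1e-3, 1e-2]})
searcher.update({"lr": 1e-2}, reward=0.9)
# pickle.dumps(searcher) would raise TypeError, because the lock is not pickle-able.
blob = pickle.dumps(searcher.get_state())
restored = searcher.clone_from_state(pickle.loads(blob))
print(restored._results)
```

If a searcher holds nothing that resists pickling, both methods can collapse to the default behavior described above (get_state returns self, clone_from_state returns state).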

model_parameters()

- Returns

Dictionary with current model (hyper)parameter values if this is supported; otherwise empty

register_pending(config, milestone=None)

Signals to the searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending. For multi-fidelity schedulers, milestone is the next milestone the evaluation will reach, so that the model registers (config, milestone) as pending. In general, the searcher may assume that update is called with that config at a later time.

remove_case(config, **kwargs)

Remove a data case previously appended by update.

For searchers which maintain the dataset of all cases (reports) passed to update, this method allows removing one case from the dataset.

update(config, **kwargs)

Update the searcher with the newest metric report.

kwargs must include the reward (key == reward_attribute). For multi-fidelity schedulers (e.g., Hyperband), intermediate results are also reported. In this case, kwargs must also include the resource (key == resource_attribute). We can also assume that if register_pending(config, …) is received, then later on the searcher receives update(config, …) with milestone as resource.

Note that for Hyperband scheduling, update is also called for intermediate results. _results is updated whenever the new reward value is larger than the previously recorded one. This implies that the best value for a config (in _results) could be obtained at an intermediate resource rather than the final one (by virtue of early stopping). Full details can be reconstructed from training_history of the scheduler.
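To make the note on intermediate results concrete, here is a sketch of a results dict that keeps the largest reward reported for each config. BestTracker is a hypothetical class, and the key names (‘accuracy’, ‘epoch’) are taken from the examples in this document.

```python
from typing import Any, Dict, Optional, Tuple


class BestTracker:
    """Hypothetical sketch: keep the largest reward reported for each config."""

    def __init__(self, reward_attribute: str = "accuracy") -> None:
        self.reward_attribute = reward_attribute
        self._results: Dict[Tuple, float] = {}

    def update(self, config: Dict[str, Any], **kwargs: Any) -> None:
        reward = kwargs[self.reward_attribute]  # the reward key is mandatory
        key = tuple(sorted(config.items()))
        # Keep the larger value, even if it was reported at an intermediate resource.
        if key not in self._results or reward > self._results[key]:
            self._results[key] = reward

    def get_best_config_reward(self) -> Tuple[Optional[Dict[str, Any]], Optional[float]]:
        if not self._results:
            return None, None
        key, reward = max(self._results.items(), key=lambda kv: kv[1])
        return dict(key), reward


tracker = BestTracker()
# The best reward for this config is seen at epoch 2, not at its last reported epoch 3.
tracker.update({"lr": 0.01}, epoch=1, accuracy=0.6)
tracker.update({"lr": 0.01}, epoch=2, accuracy=0.8)
tracker.update({"lr": 0.01}, epoch=3, accuracy=0.7)
tracker.update({"lr": 0.001}, epoch=1, accuracy=0.5)
print(tracker.get_best_config_reward())  # ({'lr': 0.01}, 0.8)
```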

GridSearcher

class autogluon.core.searcher.GridSearcher(configspace, **kwargs)

Grid Searcher that exhaustively tries all possible configurations. This searcher can only be used for discrete search spaces of type autogluon.core.space.Categorical.

Examples

>>> import autogluon.core as ag
>>> @ag.args(
...     x=ag.space.Categorical(0, 1, 2),
...     y=ag.space.Categorical('a', 'b', 'c'))
... def train_fn(args, reporter):
...     pass
>>> searcher = ag.searcher.GridSearcher(train_fn.cs)
>>> searcher.get_config()
Number of configurations for grid search is 9
{'x.choice': 2, 'y.choice': 2}
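The count of 9 in the example is the size of the Cartesian product of the two categorical spaces (3 × 3). The sketch below enumerates such a grid with itertools.product, using the index-based ‘.choice’ keys shown in the example output; grid_configs is a hypothetical helper, and it makes no claim about the order in which GridSearcher actually visits the grid.

```python
import itertools
from typing import Any, Dict, List


def grid_configs(space: Dict[str, List[Any]]) -> List[Dict[str, int]]:
    """Hypothetical helper: enumerate every combination of categorical choices,
    encoding each value by its index under a '<name>.choice' key."""
    names = sorted(space)
    index_ranges = [range(len(space[name])) for name in names]
    return [
        {f"{name}.choice": idx for name, idx in zip(names, combo)}
        for combo in itertools.product(*index_ranges)
    ]


configs = grid_configs({"x": [0, 1, 2], "y": ["a", "b", "c"]})
print(len(configs))  # 9
```

Because the grid grows multiplicatively with each added categorical, exhaustive search is only practical for small discrete spaces.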

- Attributes

debug_log: Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter.

Methods

- clone_from_state(state): Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

- configure_scheduler(scheduler): Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves.

- cumulative_profile_record(): If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

- dataset_size(): Returns the size of the dataset a model is fitted to, or 0 if no model is fitted to data.

- evaluation_failed(config, **kwargs): Called by the scheduler if an evaluation job for config failed.

- get_best_config(): Returns the best configuration found so far.

- get_best_config_reward(): Returns the best configuration found so far, as well as the reward associated with this best config.

- get_best_reward(): Calculates the reward (i.e. validation performance) produced by training under the best configuration identified so far.

- get_config(**kwargs): Returns a new hyperparameter configuration to try next.

- get_reward(config): Calculates the reward (i.e. validation performance) produced by training with the given configuration.

- get_state(): Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

- model_parameters(): Returns a dictionary with the current model (hyper)parameter values if this is supported; otherwise an empty one.

- register_pending(config[, milestone]): Signals to the searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending.

- remove_case(config, **kwargs): Removes a data case previously appended by update.

- update(config, **kwargs): Updates the searcher with the newest metric report.

clone_from_state(state)

Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

Given state as returned by get_state, this method combines the non-pickle-able part of the immutable state from self with state and returns the corresponding searcher clone. Afterwards, self is not used anymore.

If the searcher object as such is already pickle-able, then state is already the new searcher object, and the default is just returning it. In this default, self is ignored.

- Parameters

state – See above

- Returns

New searcher object

configure_scheduler(scheduler)

Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves. This method has to be called before the searcher can be used.

The implementation here sets _reward_attribute for schedulers which specify it.

- Args:

- scheduler: TaskScheduler

Scheduler the searcher is used with.

cumulative_profile_record()

If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

dataset_size()

- Returns

Size of dataset a model is fitted to, or 0 if no model is fitted to data

property debug_log

Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter. See RandomSearcher for an example.

- Returns

DebugLogPrinter; or None (not supported)

evaluation_failed(config, **kwargs)

Called by the scheduler if an evaluation job for config failed. The searcher should react appropriately (e.g., remove pending evaluations for this config, and blacklist config).

get_best_config()

Returns the best configuration found so far.

get_best_config_reward()

Returns the best configuration found so far, as well as the reward associated with this best config.

get_best_reward()

Calculates the reward (i.e. validation performance) produced by training under the best configuration identified so far. Assumes higher reward values indicate better performance.

get_reward(config)

Calculates the reward (i.e. validation performance) produced by training with the given configuration.

get_state()

Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

The state returned here must be pickle-able. If the searcher object is pickle-able, the default is returning self.

- Returns

Pickle-able mutable state of searcher

model_parameters()

- Returns

Dictionary with current model (hyper)parameter values if this is supported; otherwise empty

register_pending(config, milestone=None)

Signals to the searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending. For multi-fidelity schedulers, milestone is the next milestone the evaluation will reach, so that the model registers (config, milestone) as pending. In general, the searcher may assume that update is called with that config at a later time.

remove_case(config, **kwargs)

Remove a data case previously appended by update.

For searchers which maintain the dataset of all cases (reports) passed to update, this method allows removing one case from the dataset.

update(config, **kwargs)

Update the searcher with the newest metric report.

kwargs must include the reward (key == reward_attribute). For multi-fidelity schedulers (e.g., Hyperband), intermediate results are also reported. In this case, kwargs must also include the resource (key == resource_attribute). We can also assume that if register_pending(config, …) is received, then later on the searcher receives update(config, …) with milestone as resource.

Note that for Hyperband scheduling, update is also called for intermediate results. _results is updated whenever the new reward value is larger than the previously recorded one. This implies that the best value for a config (in _results) could be obtained at an intermediate resource rather than the final one (by virtue of early stopping). Full details can be reconstructed from training_history of the scheduler.

RLSearcher

class autogluon.core.searcher.RLSearcher(kwspaces, ctx=None, controller_type='lstm', **kwargs)

Reinforcement Learning Searcher for ConfigSpace

- Parameters

- kwspaces : keyword search spaces

The keyword spaces automatically generated by autogluon.core.args()

Examples

>>> import autogluon.core as ag
>>> @ag.args(
...     lr=ag.space.Real(1e-3, 1e-2, log=True),
...     wd=ag.space.Real(1e-3, 1e-2))
... def train_fn(args, reporter):
...     pass
>>> searcher = ag.searcher.RLSearcher(train_fn.kwspaces)
>>> searcher.get_config()
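A reinforcement learning searcher trains a controller (an LSTM by default, per controller_type='lstm') that proposes configurations and is rewarded by their validation performance. The sketch below swaps in a much simpler policy-gradient (REINFORCE) controller with one independent softmax distribution per categorical hyperparameter and a moving-average baseline. ReinforceController is hypothetical and is not the library's implementation; it only illustrates the learning signal.

```python
import math
import random
from typing import Any, Dict, List, Optional


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ReinforceController:
    """Hypothetical REINFORCE controller: one independent softmax distribution per
    categorical hyperparameter (a simplification of an LSTM controller)."""

    def __init__(self, choices: Dict[str, List[Any]], lr: float = 0.1,
                 baseline_decay: float = 0.9, seed: int = 0) -> None:
        self.choices = choices
        self.logits = {name: [0.0] * len(vals) for name, vals in choices.items()}
        self.lr = lr
        self.baseline_decay = baseline_decay
        self.baseline: Optional[float] = None
        self.rng = random.Random(seed)

    def sample(self) -> Dict[str, int]:
        """Sample one choice index per hyperparameter from the current policy."""
        return {
            name: self.rng.choices(range(len(vals)), weights=softmax(self.logits[name]))[0]
            for name, vals in self.choices.items()
        }

    def update(self, sample: Dict[str, int], reward: float) -> None:
        """Policy-gradient step: logits += lr * (reward - baseline) * grad log p(sample)."""
        if self.baseline is None:
            self.baseline = reward
        advantage = reward - self.baseline
        for name, idx in sample.items():
            probs = softmax(self.logits[name])
            for j, p in enumerate(probs):
                grad = (1.0 if j == idx else 0.0) - p  # d log softmax_idx / d logit_j
                self.logits[name][j] += self.lr * advantage * grad
        # Moving-average baseline reduces the variance of the gradient estimate.
        self.baseline = self.baseline_decay * self.baseline + (1 - self.baseline_decay) * reward


# Toy objective: only the third learning-rate choice earns a reward.
controller = ReinforceController({"lr": [1e-3, 3e-3, 1e-2]})
for _ in range(1000):
    s = controller.sample()
    controller.update(s, 1.0 if s["lr"] == 2 else 0.0)
print(softmax(controller.logits["lr"]))
```

An LSTM controller would additionally let each decision condition on the previous ones; the independent-softmax version keeps the same REINFORCE update but drops that coupling.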

- Attributes

debug_log: Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter.

Methods

- clone_from_state(state): Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

- configure_scheduler(scheduler): Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves.

- cumulative_profile_record(): If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

- dataset_size(): Returns the size of the dataset a model is fitted to, or 0 if no model is fitted to data.

- evaluation_failed(config, **kwargs): Called by the scheduler if an evaluation job for config failed.

- get_best_config(): Returns the best configuration found so far.

- get_best_config_reward(): Returns the best configuration found so far, as well as the reward associated with this best config.

- get_best_reward(): Calculates the reward (i.e. validation performance) produced by training under the best configuration identified so far.

- get_config(**kwargs): Function to sample a new configuration.

- get_reward(config): Calculates the reward (i.e. validation performance) produced by training with the given configuration.

- get_state(): Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

- model_parameters(): Returns a dictionary with the current model (hyper)parameter values if this is supported; otherwise an empty one.

- register_pending(config[, milestone]): Signals to the searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending.

- remove_case(config, **kwargs): Removes a data case previously appended by update.

- update(config, **kwargs): Updates the searcher with the newest metric report.

- load_state_dict

- state_dict

clone_from_state(state)

Together with get_state, this is needed in order to store and re-create the mutable state of the searcher.

Given state as returned by get_state, this method combines the non-pickle-able part of the immutable state from self with state and returns the corresponding searcher clone. Afterwards, self is not used anymore.

If the searcher object as such is already pickle-able, then state is already the new searcher object, and the default is just returning it. In this default, self is ignored.

- Parameters

state – See above

- Returns

New searcher object

configure_scheduler(scheduler)

Some searchers need to obtain information from the scheduler they are used with, in order to configure themselves. This method has to be called before the searcher can be used.

The implementation here sets _reward_attribute for schedulers which specify it.

- Args:

- scheduler: TaskScheduler

Scheduler the searcher is used with.

cumulative_profile_record()

If profiling is supported and active, the searcher accumulates profiling information over get_config calls; the corresponding dict is returned here.

dataset_size()

- Returns

Size of dataset a model is fitted to, or 0 if no model is fitted to data

property debug_log

Some BaseSearcher subclasses support writing a debug log, using DebugLogPrinter. See RandomSearcher for an example.

- Returns

DebugLogPrinter; or None (not supported)

evaluation_failed(config, **kwargs)

Called by the scheduler if an evaluation job for config failed. The searcher should react appropriately (e.g., remove pending evaluations for this config, and blacklist config).

get_best_config()

Returns the best configuration found so far.

get_best_config_reward()

Returns the best configuration found so far, as well as the reward associated with this best config.

get_best_reward()

Calculates the reward (i.e. validation performance) produced by training under the best configuration identified so far. Assumes higher reward values indicate better performance.

get_config(**kwargs)

Function to sample a new configuration. This function is called inside TaskScheduler to query a new configuration.

- Args:

- kwargs: Extra information may be passed from scheduler to searcher

- Returns: (config, info_dict)

Must return a valid configuration and a (possibly empty) info dict.

get_reward(config)

Calculates the reward (i.e. validation performance) produced by training with the given configuration.

get_state()

Together with clone_from_state, this is needed in order to store and re-create the mutable state of the searcher.

The state returned here must be pickle-able. If the searcher object is pickle-able, the default is returning self.

- Returns

Pickle-able mutable state of searcher

model_parameters()

- Returns

Dictionary with current model (hyper)parameter values if this is supported; otherwise empty

register_pending(config, milestone=None)

Signals to the searcher that evaluation for config has started, but not yet finished, which allows model-based searchers to register this evaluation as pending. For multi-fidelity schedulers, milestone is the next milestone the evaluation will reach, so that the model registers (config, milestone) as pending. In general, the searcher may assume that update is called with that config at a later time.

remove_case(config, **kwargs)

Remove a data case previously appended by update.

For searchers which maintain the dataset of all cases (reports) passed to update, this method allows removing one case from the dataset.

update(config, **kwargs)

Update the searcher with the newest metric report.

kwargs must include the reward (key == reward_attribute). For multi-fidelity schedulers (e.g., Hyperband), intermediate results are also reported. In this case, kwargs must also include the resource (key == resource_attribute). We can also assume that if register_pending(config, …) is received, then later on the searcher receives update(config, …) with milestone as resource.

Note that for Hyperband scheduling, update is also called for intermediate results. _results is updated whenever the new reward value is larger than the previously recorded one. This implies that the best value for a config (in _results) could be obtained at an intermediate resource rather than the final one (by virtue of early stopping). Full details can be reconstructed from training_history of the scheduler.