AutoMM Detection - Quick Start with Foundation Model on Open Vocabulary Detection (OVD)#

In this section, our goal is to use a foundation model in object detection to detect novel classes defined by an unbounded (open) vocabulary.

Setting up the imports#

To start, let’s import MultiModalPredictor, and also make sure groundingdino is installed:

try:

import groundingdino

except ImportError:

try:

from pip._internal import main as pipmain

except ImportError:

from pip import main as pipmain # for old pip version

pipmain(['install', '--user', 'git+https://github.com/FANGAreNotGnu/GroundingDINO.git']) # equals to "!pip install git+https://github.com/IDEA-Research/GroundingDINO.git"

Prepare sample image#

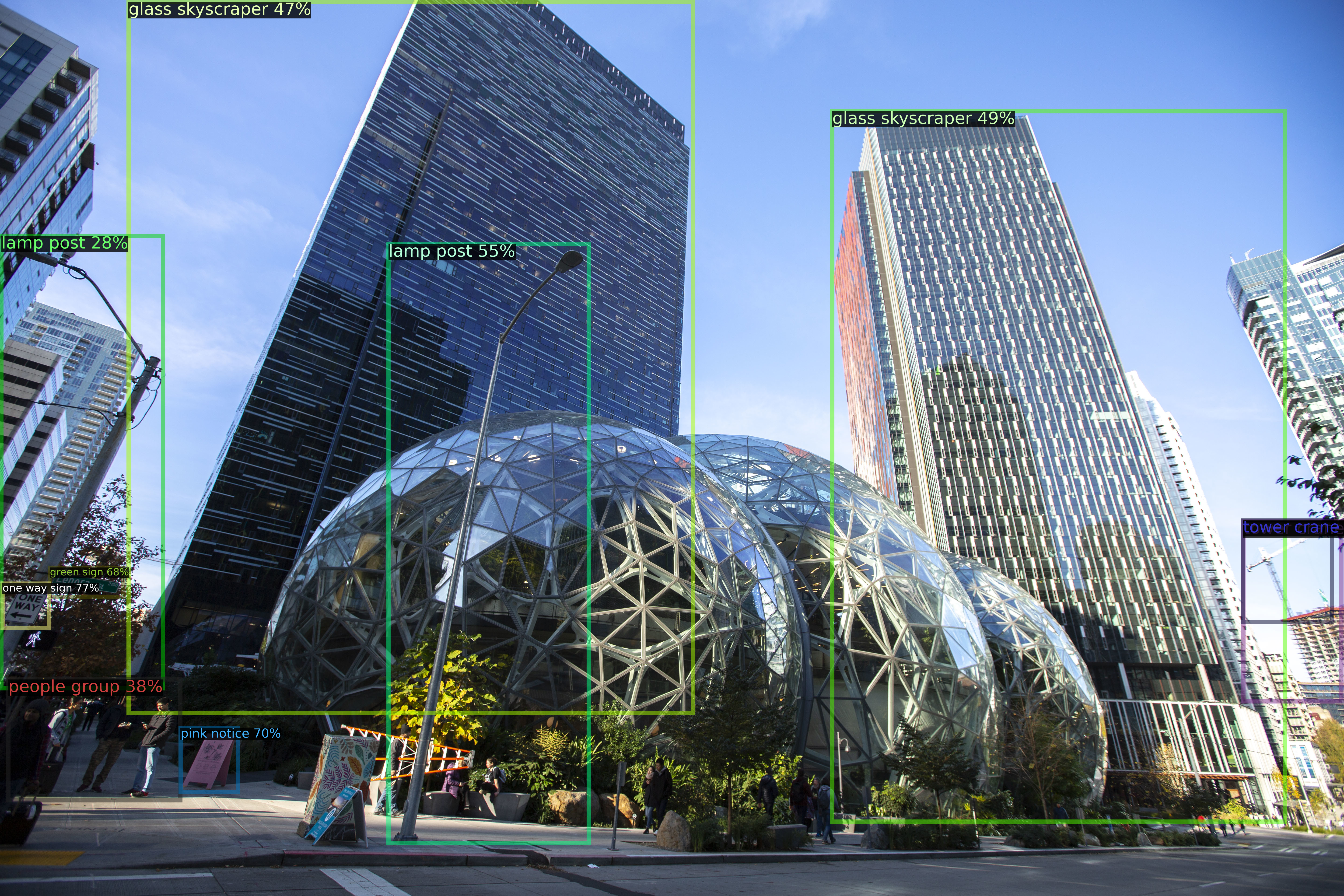

Let’s use an image of Seattle’s street view to demo:

from IPython.display import Image, display

from autogluon.multimodal import download

sample_image_url = "https://live.staticflickr.com/65535/49004630088_d15a9be500_6k.jpg"

sample_image_path = download(sample_image_url)

display(Image(filename=sample_image_path))

Downloading 49004630088_d15a9be500_6k.jpg from https://live.staticflickr.com/65535/49004630088_d15a9be500_6k.jpg...

Creating the MultiModalPredictor#

We create the MultiModalPredictor and specify the problem_type to "open_vocabulary_object_detection".

We set the preset as "best_quality", which uses a SwinB as backbone. This preset gives us higher accuracy for detection.

We also provide presets "high_quality" and "medium_quality" with SwinT as backbone, faster but also with lower performance.

# Init predictor

predictor = MultiModalPredictor(problem_type="open_vocabulary_object_detection", presets = "best_quality")

Inference#

To run inference on the image, perform:

pred = predictor.predict(

{

"image": [sample_image_path],

"prompt": ["Pink notice. Green sign. One Way sign. People group. Tower crane in construction. Lamp post. Glass skyscraper."],

},

as_pandas=True,

)

print(pred)

Downloading groundingdino_swinb_cogcoor.pth from https://github.com/IDEA-Research/GroundingDINO/releases/download/v0.1.0-alpha2/groundingdino_swinb_cogcoor.pth...

final text_encoder_type: bert-base-uncased

final text_encoder_type: bert-base-uncased

image \

0 49004630088_d15a9be500_6k.jpg

bboxes

0 [{'bbox': [11.342733869329095, 2500.7779793869...

/home/ci/opt/venv/lib/python3.10/site-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3483.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

/home/ci/opt/venv/lib/python3.10/site-packages/transformers/modeling_utils.py:881: FutureWarning: The `device` argument is deprecated and will be removed in v5 of Transformers.

warnings.warn(

/home/ci/opt/venv/lib/python3.10/site-packages/torch/utils/checkpoint.py:31: UserWarning: None of the inputs have requires_grad=True. Gradients will be None

warnings.warn("None of the inputs have requires_grad=True. Gradients will be None")

The output pred is a pandas DataFrame that has two columns, image and bboxes.

In image, each row contains the image path

In bboxes, each row is a list of dictionaries, each one representing the prediction for an object in the image: {"class": <predicted_class_name>, "bbox": [x1, y1, x2, y2], "score": <confidence_score>}, for example:

print(pred["bboxes"][0][0])

{'bbox': array([ 11.34273387, 2500.77797939, 211.40461822, 2690.81535338]), 'class': 'one way sign', 'score': array(0.7678639, dtype=float32)}

!pip install opencv-python

Requirement already satisfied: opencv-python in /home/ci/opt/venv/lib/python3.10/site-packages (4.9.0.80)

Requirement already satisfied: numpy>=1.21.2 in /home/ci/opt/venv/lib/python3.10/site-packages (from opencv-python) (1.26.4)

To visualize results, run the following:

from autogluon.multimodal.utils import ObjectDetectionVisualizer

conf_threshold = 0.2 # Specify a confidence threshold to filter out unwanted boxes

image_result = pred.iloc[0]

img_path = image_result.image # Select an image to visualize

visualizer = ObjectDetectionVisualizer(img_path) # Initialize the Visualizer

out = visualizer.draw_instance_predictions(image_result, conf_threshold=conf_threshold) # Draw detections

visualized = out.get_image() # Get the visualized image

from PIL import Image

from IPython.display import display

img = Image.fromarray(visualized, 'RGB')

display(img)

Other Examples#

You may go to AutoMM Examples to explore other examples about AutoMM.

Customization#

To learn how to customize AutoMM, please refer to Customize AutoMM.

Citation#

@misc{liu2023grounding,

title={Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection},

author={Shilong Liu and Zhaoyang Zeng and Tianhe Ren and Feng Li and Hao Zhang and Jie Yang and Chunyuan Li and Jianwei Yang and Hang Su and Jun Zhu and Lei Zhang},

year={2023},

eprint={2303.05499},

archivePrefix={arXiv},

primaryClass={cs.CV}

}