AutoMM Detection - High Performance Finetune on COCO Format Dataset

In this section, our goal is to finetune a high-performance pretrained model on the Pothole dataset in COCO format and evaluate it.

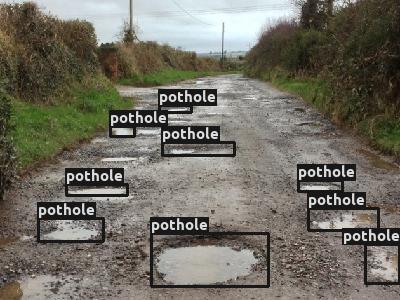

Pothole is a single-class object detection dataset (the only class is pothole) containing 665 images with bounding box annotations. It is intended for building detection models and can serve as a proof of concept or proof of value (POC/POV) for road maintenance.

See AutoMM Detection - Prepare Pothole Dataset for how to prepare the Pothole dataset.

To start, let’s import MultiModalPredictor:

```python
from autogluon.multimodal import MultiModalPredictor
```

Make sure mmcv and mmdet are installed:

```
!mim install mmcv
!pip install "mmdet>=3.0.0"
```


We also import some other packages used in this tutorial:

```python
import os
import time

from autogluon.core.utils.loaders import load_zip
```

We have the sample dataset ready in the cloud. Let’s download it:

```python
zip_file = "https://automl-mm-bench.s3.amazonaws.com/object_detection/dataset/pothole.zip"
download_dir = "./pothole"

# Download the archive and extract it into download_dir
load_zip.unzip(zip_file, unzip_dir=download_dir)
data_dir = os.path.join(download_dir, "pothole")

# COCO-format annotation files, one JSON file per split
train_path = os.path.join(data_dir, "Annotations", "usersplit_train_cocoformat.json")
val_path = os.path.join(data_dir, "Annotations", "usersplit_val_cocoformat.json")
test_path = os.path.join(data_dir, "Annotations", "usersplit_test_cocoformat.json")
```

When you use a COCO-format dataset, the input is the JSON annotation file of each dataset split. In this example, usersplit_train_cocoformat.json, usersplit_val_cocoformat.json, and usersplit_test_cocoformat.json are the annotation files of the train, validation, and test splits, respectively.
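A COCO annotation file is a single JSON object. Its "images", "annotations", and "categories" lists describe the split. The sketch below uses only the standard library and is independent of AutoMM. It assumes nothing beyond those standard COCO keys, and it counts images, boxes, and boxes per category name so you can sanity-check a split before training.

```python
import json
from collections import Counter
from typing import Any, Dict


def load_coco(path: str) -> Dict[str, Any]:
    """Read a COCO-format annotation file into a dict."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def summarize_coco(coco: Dict[str, Any]) -> Dict[str, Any]:
    """Count images, boxes, and boxes per category (standard COCO keys assumed)."""
    # Map category id -> human-readable name, e.g. {1: "pothole"}
    id_to_name = {cat["id"]: cat["name"] for cat in coco.get("categories", [])}
    annotations = coco.get("annotations", [])
    # Each annotation is one bounding box tied to a category id
    per_class = Counter(id_to_name.get(ann["category_id"], "unknown") for ann in annotations)
    return {
        "num_images": len(coco.get("images", [])),
        "num_boxes": len(annotations),
        "boxes_per_class": dict(per_class),
    }
```

For example, `summarize_coco(load_coco(train_path))` summarizes the train split. The counts depend on the split, so no output is shown here.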

We select VFNet with a ResNet-50 backbone and a Feature Pyramid Network (FPN) neck, pretrained on the COCO dataset, with an input resolution of 640x640. (The neck of an object detector refers to the additional layers between the backbone and the head. Their role is to collect feature maps from different stages.) This setting delivers high performance, but training and inference take longer and it needs much more GPU memory.

We use mean average precision in the COCO standard (`map`) as our validation metric. In the previous section, AutoMM Detection - Fast Finetune on COCO Format Dataset, we did not specify the validation metric, so the validation loss was used by default. Validation loss is much faster to compute, but mean average precision gives the best performance.
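To make the metric concrete, COCO-style mAP averages average precision (AP) over the IoU thresholds 0.50, 0.55, ..., 0.95, and mAP50 is AP at IoU 0.5. The code below is a simplified, single-class sketch in plain Python and is not the pycocotools implementation. It matches detections greedily by score and integrates the interpolated precision-recall curve over all points. The official COCO evaluation instead uses 101-point interpolation plus extra rules (area ranges, detection limits, crowd boxes), so its numbers can differ slightly.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # COCO-style (x, y, width, height)
Detection = Tuple[int, float, Box]  # (image_id, score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Detection], gts: Dict[int, List[Box]], iou_thr: float) -> float:
    """Single-class AP at one IoU threshold (all-point interpolation)."""
    num_gt = sum(len(boxes) for boxes in gts.values())
    if num_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in gts.items()}
    tp_flags = []
    # Highest-confidence detections claim ground-truth boxes first
    for img, _, box in sorted(dets, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt_box in enumerate(gts.get(img, [])):
            overlap = iou(box, gt_box)
            if not used[img][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr:
            used[img][best_j] = True
            tp_flags.append(1)  # true positive
        else:
            tp_flags.append(0)  # false positive: no unmatched box overlaps enough

    # Precision and recall after each ranked detection
    recalls, precisions, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += flag
        recalls.append(tp / num_gt)
        precisions.append(tp / k)
    # Make precision non-increasing as recall grows (interpolated PR curve)
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the step-wise PR curve
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def coco_style_map(dets: List[Detection], gts: Dict[int, List[Box]]) -> float:
    """Mean AP over IoU thresholds 0.50:0.05:0.95 (single class)."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)
```

With this definition, a box that overlaps its ground truth at IoU 0.67 counts as a hit only at the four thresholds from 0.50 to 0.65, so it yields mAP50 = 1.0 but mAP = 0.4. This gap explains why mAP is usually reported alongside mAP50.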

We also use all the GPUs (if any):

```python
checkpoint_name = "vfnet_r50-mdconv-c3-c5_fpn_ms-2x_coco"
num_gpus = -1  # use all GPUs
```

We create the MultiModalPredictor with the selected checkpoint name and number of GPUs. We need to set problem_type to "object_detection" and also provide a sample_data_path so the predictor can infer the categories of the dataset. Here we provide train_path, but any other split of this dataset also works.

```python
predictor = MultiModalPredictor(
    hyperparameters={
        "model.mmdet_image.checkpoint_name": checkpoint_name,
        "env.num_gpus": num_gpus,
        # "optimization.val_metric" is not passed: in the AutoGluon version used to build
        # this page it is not a recognized config key (fit raises a KeyError), and the
        # fit log already reports that the validation metric is "map".
    },
    problem_type="object_detection",
    sample_data_path=train_path,
)
```

We used a learning rate of 1e-4 for YOLOv3 in the previous tutorial. Here we set it to 5e-6, because larger models usually prefer smaller learning rates. By default, finetuning uses a two-stage learning rate, and the model head gets a 100x learning rate. Using a high learning rate only on the head layers makes the model converge faster during finetuning. It usually gives better performance as well, especially on small datasets with hundreds or thousands of images.
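The two-stage learning-rate idea can be sketched as grouping parameters by name. Parameters under the detection head get the base learning rate times 100, and everything else gets the base rate. This is an illustrative sketch, not AutoMM's implementation. The parameter names and the `head_prefixes` argument are hypothetical, and the returned list only mimics the shape of PyTorch optimizer parameter groups (dicts with "params" and "lr").

```python
from typing import Dict, List, Sequence


def two_stage_lr_groups(
    param_names: Sequence[str],
    base_lr: float,
    head_prefixes: Sequence[str],
    head_lr_multiplier: float = 100.0,
) -> List[Dict[str, object]]:
    """Split parameter names into a backbone/neck group and a head group.

    Hypothetical helper: names starting with any prefix in `head_prefixes`
    are treated as head parameters and get `base_lr * head_lr_multiplier`.
    """
    prefixes = tuple(head_prefixes)
    head = [n for n in param_names if n.startswith(prefixes)]
    rest = [n for n in param_names if not n.startswith(prefixes)]
    return [
        {"params": rest, "lr": base_lr},  # backbone and neck: small learning rate
        {"params": head, "lr": base_lr * head_lr_multiplier},  # head: 100x by default
    ]


groups = two_stage_lr_groups(
    ["backbone.conv1.weight", "neck.fpn.weight", "bbox_head.cls.weight"],  # hypothetical names
    base_lr=5e-6,
    head_prefixes=["bbox_head"],
)
for group in groups:
    print(group["lr"], group["params"])
```

With the 5e-6 base rate used here, the head group trains at about 5e-4 while the pretrained backbone and neck change slowly.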

We also set the per-GPU batch size (env.per_gpu_batch_size) to 2, because this model is too large to run with a larger batch size. We time the fit process to give a sense of its speed. We set the number of epochs to 1 only for a quick demonstration. To properly finetune the model on this dataset, set it to 20 or more.

```python
import time

start = time.time()
predictor.fit(
    train_path,
    hyperparameters={
        "optimization.learning_rate": 5e-6,  # two-stage lr: the detection head uses 100x this value
        "optimization.max_epochs": 1,
        "optimization.check_val_every_n_epoch": 1,  # make sure there is at least one validation
        "env.per_gpu_batch_size": 2,  # decrease it when the model is large
    },
)
end = time.time()
```

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

Using default root folder: ./pothole/pothole/Annotations/... Specify `root=...` if you feel it is wrong...

No path specified. Models will be saved in: "AutogluonModels/ag-20230614_072950/"

Global seed set to 0

AutoMM starts to create your model. ✨

- AutoGluon version is 0.7.1b20230614.

- Pytorch version is 1.13.1+cu117.

- Model will be saved to "/home/ci/autogluon/docs/tutorials/multimodal/object_detection/finetune/AutogluonModels/ag-20230614_072950".

- Validation metric is "map".

- To track the learning progress, you can open a terminal and launch Tensorboard:

```shell

# Assume you have installed tensorboard

tensorboard --logdir /home/ci/autogluon/docs/tutorials/multimodal/object_detection/finetune/AutogluonModels/ag-20230614_072950

```

Enjoy your coffee, and let AutoMM do the job ☕☕☕ Learn more at https://auto.gluon.ai


Print the elapsed time. Even a single epoch takes a long time.

```python
print("This finetuning takes %.2f seconds." % (end - start))
```

To get a model with reasonable performance, you need to finetune it for more epochs. We set max_epochs to 50, trained a model offline, and uploaded it to AWS S3. To load it and check the result:

```python
# Load the trained predictor from S3
zip_file = "https://automl-mm-bench.s3.amazonaws.com/object_detection/checkpoints/pothole_AP50_718.zip"
download_dir = "./pothole_AP50_718"
load_zip.unzip(zip_file, unzip_dir=download_dir)
better_predictor = MultiModalPredictor.load("./pothole_AP50_718/AutogluonModels/ag-20221123_021130")
better_predictor.set_num_gpus(1)

# Evaluate the new predictor on the test split
better_predictor.evaluate(test_path)
```

We can get the predictions on the test set:

```python
pred = better_predictor.predict(test_path)
```

Let’s also visualize the prediction result:

```
!pip install opencv-python
```

```python
from autogluon.multimodal.utils import visualize_detection

conf_threshold = 0.25  # confidence threshold to filter out unwanted boxes
visualization_result_dir = "./"  # save the visualized image in the current working directory

visualized = visualize_detection(
    pred=pred[12:13],
    detection_classes=better_predictor.get_predictor_classes(),
    conf_threshold=conf_threshold,
    visualization_result_dir=visualization_result_dir,
)
```

```python
from PIL import Image
from IPython.display import display

# Reverse the channel order (BGR to RGB) before building the PIL image
img = Image.fromarray(visualized[0][:, :, ::-1], 'RGB')
display(img)
```
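The `conf_threshold` passed to `visualize_detection` filters out low-confidence boxes before drawing. The code below is a minimal sketch of that filtering step, not AutoMM's code. It assumes each predicted box is a dict with "class", "bbox", and "score" keys. That box format and the inclusive `>=` comparison are both assumptions.

```python
from typing import Dict, List


def filter_by_confidence(boxes: List[Dict], conf_threshold: float = 0.25) -> List[Dict]:
    """Keep boxes whose score is at least conf_threshold, highest score first."""
    kept = [b for b in boxes if b["score"] >= conf_threshold]
    return sorted(kept, key=lambda b: b["score"], reverse=True)


example = [  # hypothetical predictions for one image
    {"class": "pothole", "bbox": [10, 20, 110, 90], "score": 0.91},
    {"class": "pothole", "bbox": [200, 40, 260, 80], "score": 0.12},
    {"class": "pothole", "bbox": [300, 150, 380, 210], "score": 0.40},
]
print([b["score"] for b in filter_by_confidence(example)])  # [0.91, 0.4]
```

Raising the threshold removes more false positives but can also drop real potholes, so it is worth trying a few values when inspecting results.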

Under this high-performance finetune setting, training took a long time but reached mAP = 0.450 and mAP50 = 0.718!

For how to finetune faster, see AutoMM Detection - Fast Finetune on COCO Format Dataset, where we finetuned a YOLOv3 model that has lower performance but is much faster.

Other Examples

You may go to AutoMM Examples to explore other examples about AutoMM.

Customization

To learn how to customize AutoMM, please refer to Customize AutoMM.

Citation

```bibtex
@article{DBLP:journals/corr/abs-2008-13367,
  author     = {Haoyang Zhang and
                Ying Wang and
                Feras Dayoub and
                Niko S{\"{u}}nderhauf},
  title      = {VarifocalNet: An IoU-aware Dense Object Detector},
  journal    = {CoRR},
  volume     = {abs/2008.13367},
  year       = {2020},
  url        = {https://arxiv.org/abs/2008.13367},
  eprinttype = {arXiv},
  eprint     = {2008.13367},
  timestamp  = {Wed, 16 Sep 2020 11:20:03 +0200},
  biburl     = {https://dblp.org/rec/journals/corr/abs-2008-13367.bib},
  bibsource  = {dblp computer science bibliography, https://dblp.org}
}
```