# Version 1.4.0

We are happy to announce the AutoGluon 1.4.0 release!

AutoGluon 1.4.0 introduces massive new features and improvements to both tabular and time series modules. In particular, we introduce the `extreme` preset to TabularPredictor, which sets a new state of the art for predictive performance **by a massive margin** on datasets with fewer than 30000 samples. We have also added 5 new tabular model families in this release: RealMLP, TabM, TabPFNv2, TabICL, and Mitra. We also release **MLZero 1.0**, aka AutoGluon-Assistant, an end-to-end automated data science agent that brings AutoGluon from 3 lines of code to 0. For more details, refer to the Spotlight section below.

This release contains [69 commits from 18 contributors](https://github.com/autogluon/autogluon/graphs/contributors?from=5%2F21%2F2025&to=7%2F26%2F2025&type=c)! See the full commit change-log here: https://github.com/autogluon/autogluon/compare/1.3.1...1.4.0

Join the community: [Discord](https://discord.gg/wjUmjqAc2N)

Get the latest updates: [Twitter](https://twitter.com/autogluon)

This release supports Python versions 3.9, 3.10, 3.11, and 3.12. Loading models trained on older versions of AutoGluon is not supported. Please re-train models using AutoGluon 1.4.0.

--------

## Spotlight

### AutoGluon Tabular Extreme Preset

AutoGluon 1.4.0 introduces a new tabular preset, `extreme_quality` aka `extreme`.

AutoGluon's [extreme preset](https://auto.gluon.ai/stable/tutorials/tabular/tabular-essentials.html#presets) is **the largest singular improvement to AutoGluon's predictive performance in the history of the package**, even larger than the improvement seen in AutoGluon 1.0 compared to 0.8.

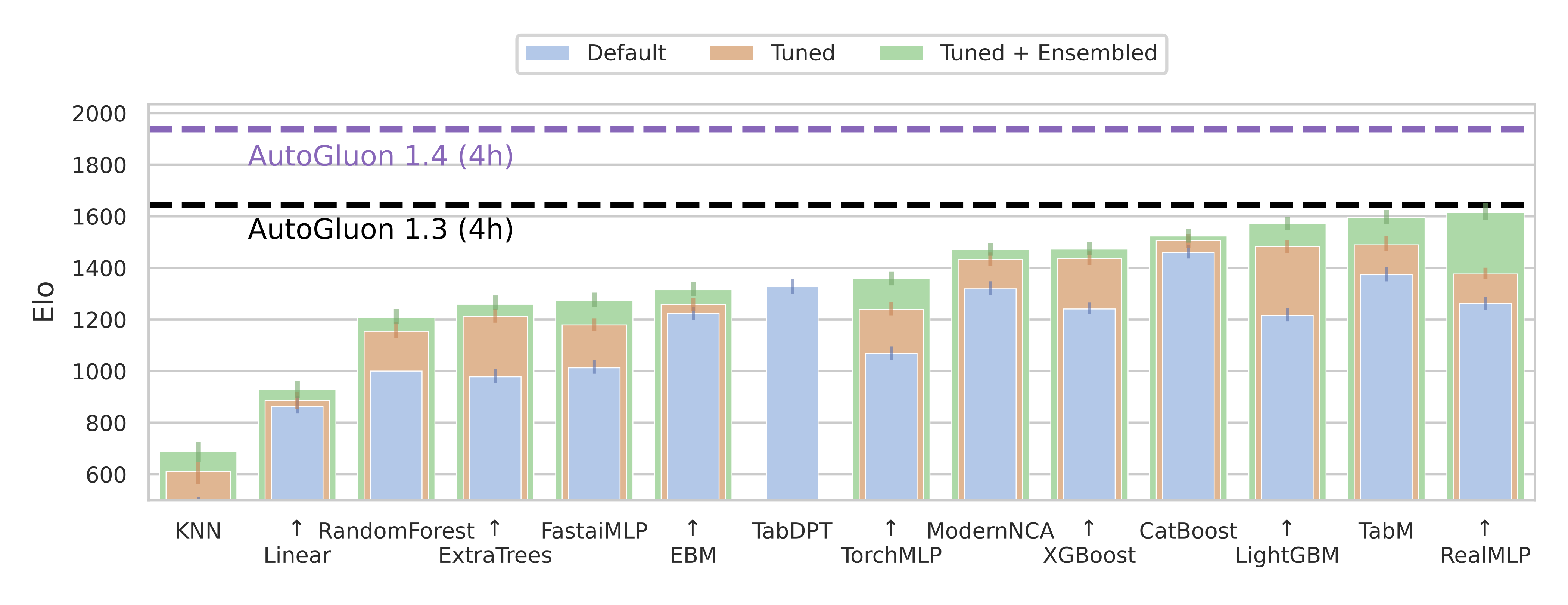

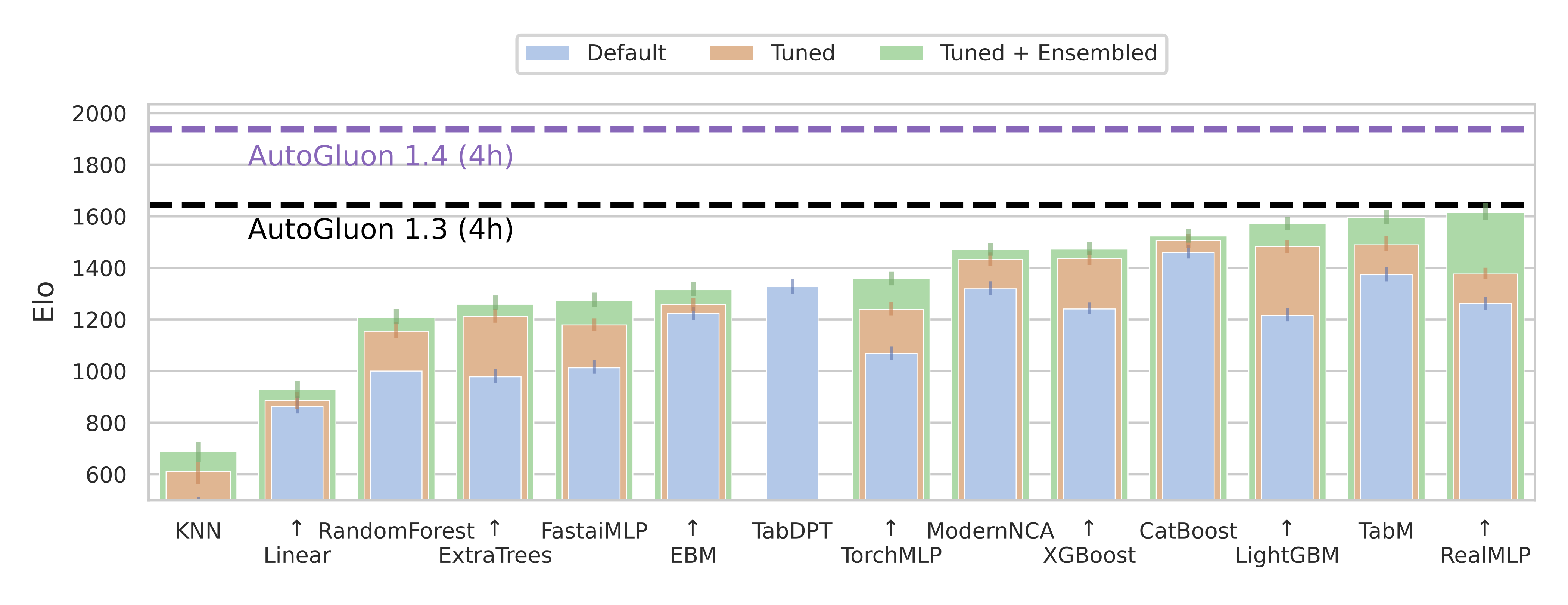

This preset achieves an **88% win-rate** vs AutoGluon 1.3 `best_quality` for datasets with fewer than 10000 samples, and a 290 Elo improvement overall on [TabArena](https://tabarena.ai) (shown in the figure above).

Try it out in 3 lines of code:

```python
from autogluon.tabular import TabularPredictor

predictor = TabularPredictor(label="class").fit("train.csv", presets="extreme")
predictions = predictor.predict("test.csv")
```

The `extreme` preset leverages a [new model portfolio](https://github.com/autogluon/autogluon/blob/master/tabular/src/autogluon/tabular/configs/zeroshot/zeroshot_portfolio_2025.py), which is an improved version of the `TabArena ensemble` shown in Figure 6a of the [TabArena paper](https://arxiv.org/abs/2506.16791).

It consists of many new model families added in this release: TabPFNv2, TabICL, Mitra, TabM, as well as tree methods: CatBoost, LightGBM, XGBoost.

This preset is not only more accurate, it also requires much less training time. AutoGluon's `extreme` preset in 5 minutes is able to outperform `best` run for 4 hours.

In order to get the most out of the `extreme` preset, a CUDA-compatible GPU is required, ideally with 32+ GB VRAM.

Note that inference time can be longer than `best`, but with a GPU it is very reasonable.

The `extreme` portfolio is only leveraged for datasets with at most 30000 samples. For larger datasets, we continue to use the `best_quality` portfolio.

The preset requires downloading foundation model weights for TabPFNv2, TabICL, and Mitra during fit. If you don't have an internet connection, ensure that you pre-download the weights of the models to be able to use them during fit.
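As a rough illustration of pre-downloading weights, the sketch below fetches the two Mitra repositories named in these notes into the local Hugging Face cache. It assumes the `huggingface_hub` package is installed and that AutoGluon resolves Mitra weights through that cache; both are assumptions, not behavior confirmed by these notes. `predownload` and `MITRA_REPOS` are hypothetical names, and TabPFNv2 and TabICL may store their weights elsewhere.

```python
# Hugging Face repositories named in these release notes for Mitra.
MITRA_REPOS = ("autogluon/mitra-classifier", "autogluon/mitra-regressor")


def predownload(repo_ids=MITRA_REPOS, download_fn=None):
    """Fetch each repository into the local cache and return {repo_id: local_path}.

    Hypothetical helper, not part of AutoGluon. `download_fn` can be swapped
    out, for example to dry-run the logic without network access.
    """
    if download_fn is None:
        # Imported lazily so this module loads without huggingface_hub installed.
        from huggingface_hub import snapshot_download

        download_fn = snapshot_download
    return {repo_id: download_fn(repo_id=repo_id) for repo_id in repo_ids}
```

Run `predownload()` once on a machine with internet access, then copy or mount the resulting cache directory on the offline machine.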

This preset is considered experimental for this release, and may change without warning in a future release.

### TabArena and new models: TabPFNv2, TabICL, TabM, RealMLP

🚨What is SOTA on tabular data, really? We are excited to introduce [TabArena](https://tabarena.ai), a living benchmark for machine learning on IID tabular data with:

📊 an online leaderboard accepting submissions

📑 carefully curated datasets (real, predictive, tabular, IID, permissive license)

📈 strong tree-based, deep learning, and foundation models

⚙️ best practices for evaluation (inner CV, outer CV, early stopping)

ℹ️ **Overview**

Leaderboard: https://tabarena.ai

Paper: https://arxiv.org/abs/2506.16791

Code: https://tabarena.ai/code

💡 **Main insights:**

➡️ Recent deep learning models, RealMLP and TabM, have marginally overtaken boosted trees with weighted ensembling, although they have slower train+inference times. With defaults or regular tuning, CatBoost takes the #1 spot.

➡️ Foundation models TabPFNv2 and TabICL are only applicable to a subset of datasets, but perform very strongly on these. They have a large inference time and still need tuning/ensembling to get the top spot (for TabPFNv2).

➡️ The winner does NOT take it all. By using a weighted ensemble of different model types from TabArena, we can significantly outperform the current state of the art on tabular data, AutoGluon 1.3.

➡️ These insights have been directly incorporated into the AutoGluon 1.4 release with the [extreme preset](https://auto.gluon.ai/stable/tutorials/tabular/tabular-essentials.html#presets), dramatically advancing the state of the art!

➡️ The models TabPFNv2, TabICL, TabM, and RealMLP have been added to AutoGluon! To use them, run `pip install autogluon[tabarena]` and use the `extreme` preset.

🎯TabArena is a living benchmark. With the community, we will continually update it!

TabArena Authors: [Nick Erickson](https://github.com/Innixma), [Lennart Purucker](https://github.com/LennartPurucker), [Andrej Tschalzev](https://github.com/atschalz), [David Holzmüller](https://github.com/dholzmueller), [Prateek Mutalik Desai](https://github.com/prateekdesai04), [David Salinas](https://github.com/geoalgo), [Frank Hutter](https://github.com/frank-hutter)

### AutoGluon Assistant (MLZero)

> *Multi-Agent System Powered by LLMs for End-to-end Multimodal ML Automation*

We are excited to present the [AutoGluon Assistant](https://github.com/autogluon/autogluon-assistant) 1.0 release. Compared with v0.1, v1.0 expands beyond tabular data to robustly support many modalities, including **image, text, tabular, audio and mixed-data pipelines**. This aligns with the MLZero vision of comprehensive, modality-agnostic ML automation.

AutoGluon Assistant v1.0 is now synonymous with **"MLZero: A Multi-Agent System for End-to-end Machine Learning Automation"** ([arXiv:2505.13941](https://arxiv.org/abs/2505.13941)), the end-to-end, zero-human-intervention AutoML agent framework for multimodal data. Built on a novel **multi-agent architecture** using LLMs, MLZero handles perception, memory (semantic & episodic), code generation, execution, and iterative debugging — seamlessly transforming raw multimodal inputs into high-quality ML/DL pipelines.

- **No-code**: Users define tasks purely through natural language ("classify images of cats vs dogs with custom labels"), and MLZero delivers complete solutions with zero manual configuration or technical expertise required.

- **Built on proven foundations**: MLZero generates code using established, high-performance ML libraries rather than reinventing the wheel, ensuring robust solutions while maintaining the flexibility to easily integrate new libraries as they emerge.

- **Research-grade performance**: MLZero is extensively validated across 25 challenging tasks spanning diverse data modalities. It outperforms the competing methods by a large margin, with a success rate of 0.92 (+263.6%) and an average rank of 2.42.

| Metric | Ours | Codex CLI | Codex CLI (+reasoning) | AIDE | DS-Agent | AK |
|-------------|--------------------------|---------------|---------------|----------|--------------|--------|
| **Avg. Rank ↓** | **2.42** | 8.04 | 5.76 | 6.16 | 8.26 | 8.28 |
| **Rel. Time ↓** | 1.0 | 0.15 | 0.23 | 2.83 | N/A | 4.82 |
| **Success ↑** | **92.0%** | 14.7% | 69.3% | 25.3% | 13.3% | 14.7% |

- **Modular and extensible architecture**: We separate the design and implementation of each agent and prompts for different purposes, with a centralized manager coordinating them. This makes adding or editing agents, prompts, and workflows straightforward and intuitive for future development.
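To make the relative-improvement figure easier to interpret, the snippet below recomputes relative gains from the success-rate row of the table above. The reading that +263.6% is measured against AIDE's 25.3% is an inference from the arithmetic, not something these notes state; `relative_gain_pct` is a hypothetical helper.

```python
def relative_gain_pct(ours: float, baseline: float) -> float:
    """Relative improvement of `ours` over `baseline`, in percent."""
    return (ours - baseline) / baseline * 100.0


# Success rates (in percent) copied from the table above.
success = {
    "Ours": 92.0,
    "Codex CLI": 14.7,
    "Codex CLI (+reasoning)": 69.3,
    "AIDE": 25.3,
    "DS-Agent": 13.3,
    "AK": 14.7,
}

for name, rate in success.items():
    if name != "Ours":
        print(f"{name}: {relative_gain_pct(success['Ours'], rate):+.1f}%")
```

Under this reading, the gain over the strongest competitor by success rate, Codex CLI (+reasoning), is smaller than the headline figure.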

We’re also excited to introduce the newly redesigned **WebUI** in v1.0, now with a streamlined chatbot-style interface that makes interacting with MLZero intuitive and engaging. We’re also bringing **MCP (Model Context Protocol)** integration to MLZero, enabling seamless remote orchestration of AutoML pipelines through a standardized protocol.

AutoGluon Assistant is supported on Python 3.8 - 3.11 and is available on Linux.

Installation:

```bash
pip install uv
# Quote the requirement so the shell does not treat ">" as an output redirect.
uv pip install "autogluon.assistant>=1.0"
```

To use CLI:

```bash
mlzero -i
```

To use webUI:

```bash
mlzero-backend # command to start backend
mlzero-frontend # command to start frontend on 8509 (default)
```

To use MCP:

```bash
# server
mlzero-backend # command to start backend
bash ./src/autogluon/mcp/server/start_services.sh # This will start the service; run it in a new terminal.

# client
python ./src/autogluon/mcp/client/server.py
```

MLZero Authors: [Haoyang Fang](https://github.com/FANGAreNotGnu), [Boran Han](https://github.com/boranhan), [Steven Shen](https://github.com/HuawenShen), [Nick Erickson](https://github.com/Innixma), [Xiyuan Zhang](https://xiyuanzh.github.io/), [Su Zhou](https://github.com/suzhoum), [Anirudh Dagar](https://github.com/AnirudhDagar), [Jiani Zhang](https://jennyzhang0215.github.io/), [Ali Caner Turkmen](https://github.com/canerturkmen), [Cuixiong Hu](https://github.com/tonyhoo), [Huzefa Rangwala](https://cs.gmu.edu/~hrangwal/), [Ying Nian Wu](https://scholar.google.com/citations?user=7k_1QFIAAAAJ&hl=en), [Bernie Wang](https://www.mit.edu/~ywang02/), [George Karypis](https://karypis.github.io/)

### Mitra

🚀 [Mitra](https://huggingface.co/autogluon/mitra-classifier) is a new state-of-the-art tabular foundation model developed by the AutoGluon team, natively supported in AutoGluon with just **three lines of code** via `predictor.fit(train_data, hyperparameters={"MITRA": {}})`. Built on the in-context learning paradigm and **pretrained exclusively on synthetic data**, Mitra introduces a principled pretraining approach by carefully selecting and mixing diverse synthetic priors to promote robust generalization across a wide range of real-world tabular datasets. Mitra is incorporated into the new `extreme` preset.

📊 Mitra achieves **state of the art performance** on major benchmarks including TabRepo, TabZilla, AMLB, and TabArena, especially excelling on small tabular datasets with fewer than 5,000 samples and 100 features, for both **classification** and **regression** tasks.

🧠 Mitra supports both **zero-shot** and **fine-tuning** modes and runs seamlessly on both **GPU** and **CPU**. Its weights are fully open-sourced under the Apache-2.0 license, making it a privacy-conscious and production-ready solution for enterprises concerned about data sharing and hosting.

🔗 Learn more by reading the [Mitra release blog post](https://www.amazon.science/blog/mitra-mixed-synthetic-priors-for-enhancing-tabular-foundation-models) and on HuggingFace:

* Classification model: [autogluon/mitra-classifier](https://huggingface.co/autogluon/mitra-classifier)

* Regression model: [autogluon/mitra-regressor](https://huggingface.co/autogluon/mitra-regressor)

We welcome community feedback for future iterations. Give us a like on HuggingFace if you want to see more cutting-edge foundation models for structured data!

Mitra Authors: [Xiyuan Zhang](https://xiyuanzh.github.io/), [Danielle Robinson](https://dcmaddix.github.io/), [Junming Yin](https://github.com/junmingy), [Nick Erickson](https://github.com/Innixma), [Abdul Fatir Ansari](https://github.com/abdulfatir), [Boran Han](https://github.com/boranhan), [Shuai Zhang](https://github.com/cheungdaven), [Leman Akoglu](https://scholar.google.com/citations?user=4ITkr_kAAAAJ&hl=en), [Christos Faloutsos](https://www.cs.cmu.edu/~christos/), [Michael W. Mahoney](https://www.stat.berkeley.edu/~mmahoney/), [Cuixiong Hu](https://github.com/tonyhoo), [Huzefa Rangwala](https://cs.gmu.edu/~hrangwal/), [George Karypis](https://karypis.github.io/), [Bernie Wang](https://www.mit.edu/~ywang02/)

--------

## General

- Add CPU utility functions for better CPU detection in constrained environments such as Docker containers and Slurm clusters. [@tonyhoo](https://github.com/tonyhoo) ([#5197](https://github.com/autogluon/autogluon/pull/5197))

- Use joblib instead of loky for CPU detection. [@shchur](https://github.com/shchur) ([#5215](https://github.com/autogluon/autogluon/pull/5215))

- Support Apple Silicon and log it in the system info. [@tonyhoo](https://github.com/tonyhoo) ([#5141](https://github.com/autogluon/autogluon/pull/5141))

- Add load pickle from url support, fix save_str if root path. [@Innixma](https://github.com/Innixma) ([#5142](https://github.com/autogluon/autogluon/pull/5142))

- Use pyarrow by default, remove fastparquet. [@Innixma](https://github.com/Innixma) ([#5150](https://github.com/autogluon/autogluon/pull/5150))

- Resolve AttributeError in LinearModel when using RAPIDS cuML models. [@tonyhoo](https://github.com/tonyhoo) ([#5157](https://github.com/autogluon/autogluon/pull/5157))

- Add kwargs option to upload_file. [@Innixma](https://github.com/Innixma) ([#5161](https://github.com/autogluon/autogluon/pull/5161))

- Prioritize the CUDA libraries from the PyTorch wheel over the system/DLC ones. [@FireballDWF](https://github.com/FireballDWF) ([#5163](https://github.com/autogluon/autogluon/pull/5163))

- Update the NumPy version cap so that 2.3.0 is allowed. [@FireballDWF](https://github.com/FireballDWF) ([#5170](https://github.com/autogluon/autogluon/pull/5170))

- Replace pkg_resources.parse_version with packaging.version.parse. [@shchur](https://github.com/shchur) ([#5182](https://github.com/autogluon/autogluon/pull/5182))

- Update pandas, scikit-learn, and scipy version caps in setup utils. [@tonyhoo](https://github.com/tonyhoo) ([#5194](https://github.com/autogluon/autogluon/pull/5194))

- Enhance spunge_augment and munge_augment functions for model distillation. [@tonyhoo](https://github.com/tonyhoo) ([#5208](https://github.com/autogluon/autogluon/pull/5208))

- Spunge Augmentation Speed-Up. [@mwhol](https://github.com/mwhol) ([#5217](https://github.com/autogluon/autogluon/pull/5217))

- Increase PyTorch cap to 2.8 to enable 2.7. [@FireballDWF](https://github.com/FireballDWF) ([#5089](https://github.com/autogluon/autogluon/pull/5089))

- Resolve datetime deprecation warnings. [@emmanuel-ferdman](https://github.com/emmanuel-ferdman) ([#5069](https://github.com/autogluon/autogluon/pull/5069))
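To illustrate why CPU detection needs care in containers and cluster jobs, here is a minimal standard-library sketch. `available_cpu_count` is a hypothetical helper and is not one of the utility functions added in the pull requests above; it only shows the general idea of preferring the CPUs a process is allowed to use over the machine total.

```python
import os


def available_cpu_count() -> int:
    """CPUs this process may use, which can be fewer than the machine has."""
    if hasattr(os, "sched_getaffinity"):
        # Linux: respects affinity masks set by schedulers such as Slurm,
        # by `taskset`, or by Docker's --cpuset-cpus option.
        return len(os.sched_getaffinity(0))
    # Other platforms: fall back to the machine-wide logical CPU count.
    return os.cpu_count() or 1


print(f"usable CPUs: {available_cpu_count()} / machine total: {os.cpu_count()}")
```

Note that affinity does not capture CPU-time quotas (for example Docker's `--cpus`), which is one reason dedicated detection utilities are useful.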

--------

## Tabular

### New Presets

- Add [extreme preset](https://auto.gluon.ai/stable/tutorials/tabular/tabular-essentials.html#presets) with meta-learned [TabArena](https://tabarena.ai) portfolio. [@Innixma](https://github.com/Innixma) ([#5211](https://github.com/autogluon/autogluon/pull/5211))

- The `extreme` preset is **the largest singular improvement to AutoGluon's predictive performance in the history of the package**, even larger than the improvement seen in AutoGluon 1.0 compared to 0.8.

- For more information, refer to the Spotlight section above.

### New Models

#### Mitra

- Add [Mitra](https://auto.gluon.ai/stable/api/autogluon.tabular.models.html#autogluon.tabular.models.MitraModel) Model (key: `"MITRA"`). [@xiyuanzh](https://github.com/xiyuanzh), [@dcmaddix](https://github.com/dcmaddix), [@junmingy](https://github.com/junmingy), [@Innixma](https://github.com/Innixma), [@tonyhoo](https://github.com/tonyhoo) ([#5195](https://github.com/autogluon/autogluon/pull/5195), [#5218](https://github.com/autogluon/autogluon/pull/5218), [#5232](https://github.com/autogluon/autogluon/pull/5232), [#5221](https://github.com/autogluon/autogluon/pull/5221))

- Install via `pip install autogluon.tabular[all,mitra]` (and natively in `pip install autogluon`)

- Blog Post: [Mitra: Mixed synthetic priors for enhancing tabular foundation models](https://www.amazon.science/blog/mitra-mixed-synthetic-priors-for-enhancing-tabular-foundation-models)

- [Usage Tutorial](https://auto.gluon.ai/dev/tutorials/tabular/tabular-foundational-models.html)

#### TabPFNv2

- Add [TabPFNv2](https://auto.gluon.ai/stable/api/autogluon.tabular.models.html#autogluon.tabular.models.TabPFNV2Model) Model (key: `"TABPFNV2"`). [@LennartPurucker](https://github.com/LennartPurucker), [@Innixma](https://github.com/Innixma) ([#5191](https://github.com/autogluon/autogluon/pull/5191))

- Install via `pip install autogluon.tabular[all,tabpfn]` (or `pip install autogluon[tabarena]`)

- Paper: [Accurate predictions on small data with a tabular foundation model](https://www.nature.com/articles/s41586-024-08328-6)

- [Usage Tutorial](https://auto.gluon.ai/dev/tutorials/tabular/tabular-foundational-models.html)

#### TabICL

- Add [TabICL](https://auto.gluon.ai/stable/api/autogluon.tabular.models.html#autogluon.tabular.models.TabICLModel) Model (key: `"TABICL"`). [@LennartPurucker](https://github.com/LennartPurucker), [@Innixma](https://github.com/Innixma) ([#5193](https://github.com/autogluon/autogluon/pull/5193))

- Install via `pip install autogluon.tabular[all,tabicl]` (or `pip install autogluon[tabarena]`)

- Paper: [TabICL: A Tabular Foundation Model for In-Context Learning on Large Data](https://arxiv.org/abs/2502.05564)

- [Usage Tutorial](https://auto.gluon.ai/dev/tutorials/tabular/tabular-foundational-models.html)

#### RealMLP

- Add [RealMLP](https://auto.gluon.ai/stable/api/autogluon.tabular.models.html#autogluon.tabular.models.RealMLPModel) Model (key: `"REALMLP"`). [@dholzmueller](https://github.com/dholzmueller), [@Innixma](https://github.com/Innixma), [@LennartPurucker](https://github.com/LennartPurucker) ([#5190](https://github.com/autogluon/autogluon/pull/5190))

- Install via `pip install autogluon.tabular[all,realmlp]` (or `pip install autogluon[tabarena]`)

- Paper: [Better by Default: Strong Pre-Tuned MLPs and Boosted Trees on Tabular Data](https://arxiv.org/abs/2407.04491)

#### TabM

- Add [TabM](https://auto.gluon.ai/stable/api/autogluon.tabular.models.html#autogluon.tabular.models.TabMModel) Model (key: `"TABM"`). [@LennartPurucker](https://github.com/LennartPurucker), [@dholzmueller](https://github.com/dholzmueller), [@Innixma](https://github.com/Innixma) ([#5196](https://github.com/autogluon/autogluon/pull/5196))

- Install via `pip install autogluon.tabular[all,tabm]` (and natively in `pip install autogluon`)

- Paper: [TabM: Advancing Tabular Deep Learning with Parameter-Efficient Ensembling](https://arxiv.org/abs/2410.24210)
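The model keys listed above can be passed through the `hyperparameters` argument, in the same way as the `{"MITRA": {}}` example in the Spotlight section. The sketch below assumes that an empty dict selects each model's defaults and that the relevant optional dependencies are installed; `build_hyperparameters` and `fit_new_models` are hypothetical helper names.

```python
# Model keys as listed in these release notes.
NEW_MODEL_KEYS = ("MITRA", "TABPFNV2", "TABICL", "REALMLP", "TABM")


def build_hyperparameters(keys=NEW_MODEL_KEYS):
    """Map each model key to an empty config (assumed to mean model defaults)."""
    return {key: {} for key in keys}


def fit_new_models(train_data, label, keys=NEW_MODEL_KEYS):
    """Fit only the selected models; requires autogluon.tabular to be installed."""
    # Imported lazily so the helper above can be used without AutoGluon.
    from autogluon.tabular import TabularPredictor

    return TabularPredictor(label=label).fit(
        train_data, hyperparameters=build_hyperparameters(keys)
    )
```

For example, `fit_new_models("train.csv", label="class", keys=("TABM", "REALMLP"))` would train just those two model families.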

#### Removals

- Removed TabPFNv1 model, as it is incompatible with TabPFNv2. [@Innixma](https://github.com/Innixma) ([#5191](https://github.com/autogluon/autogluon/pull/5191))

- Removed KNN model from `best_quality` and `high_quality` preset portfolios, as it did not generally improve results. [@Innixma](https://github.com/Innixma) ([#5211](https://github.com/autogluon/autogluon/pull/5211))

### Fixes and Improvements

- Respect num_cpus/num_gpus in sequential_local fit. [@Innixma](https://github.com/Innixma) ([#5203](https://github.com/autogluon/autogluon/pull/5203))

- Switch to loky for get_cpu_count in all places. [@Innixma](https://github.com/Innixma) ([#5204](https://github.com/autogluon/autogluon/pull/5204))

- Add support for max_rows, max_features, max_classes, problem_types. [@Innixma](https://github.com/Innixma) ([#5181](https://github.com/autogluon/autogluon/pull/5181))

- Add ag.ens. shortcut for ag_args_ensemble. [@Innixma](https://github.com/Innixma) ([#5143](https://github.com/autogluon/autogluon/pull/5143))

- Fix CatBoost crashing for problem_type="quantile" if len(quantile_levels) == 1. [@shchur](https://github.com/shchur) ([#5201](https://github.com/autogluon/autogluon/pull/5201))

- Add tabular foundational model cache from s3 to benchmark to avoid rate limit issue from HF. [@tonyhoo](https://github.com/tonyhoo) ([#5214](https://github.com/autogluon/autogluon/pull/5214))

- Fix default loss_function for CatBoostModel with problem_type='regression'. [@shchur](https://github.com/shchur) ([#5216](https://github.com/autogluon/autogluon/pull/5216))

- Remove fobj in Softclass. [@rsj123](https://github.com/rsj123) ([#5219](https://github.com/autogluon/autogluon/pull/5219))

- Minor enhancements and fixes. [@adibiasio](https://github.com/adibiasio), [@Innixma](https://github.com/Innixma) ([#5158](https://github.com/autogluon/autogluon/pull/5158))

--------

## TimeSeries

### Highlights

- Major [efficiency improvements](https://github.com/autogluon/autogluon/pull/5159) to the core `TimeSeriesDataFrame` methods, resulting in up to 7x lower end-to-end `predictor.fit()` and `predict()` time when working with large datasets (>10M rows).

- New tabular forecasting model [`PerStepTabular`](https://auto.gluon.ai/stable/tutorials/timeseries/forecasting-model-zoo.html#autogluon.timeseries.models.PerStepTabularModel) that fits a separate tabular regression model for each time step in the forecast horizon. Both fitting and inference for the model are parallelized across cores, resulting in one of the most efficient and accurate implementations of this model among open-source Python packages.
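The per-step idea can be illustrated with a toy, standard-library-only sketch: one separate regression is fitted for every step of the forecast horizon. This is not AutoGluon's implementation. It uses a single lag feature and ordinary least squares purely for illustration, and `fit_line` and `per_step_forecast` are hypothetical names.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    return my - b * mx, b


def per_step_forecast(series, prediction_length):
    """Fit one regression per horizon step h, mapping y[t] to y[t + h]."""
    if len(series) < prediction_length + 2:
        raise ValueError("series is too short for the requested horizon")
    last_value = series[-1]
    forecasts = []
    for h in range(1, prediction_length + 1):
        inputs = series[:-h]   # y[t]
        targets = series[h:]   # y[t + h]
        a, b = fit_line(inputs, targets)  # a separate model for step h
        forecasts.append(a + b * last_value)
    return forecasts


history = [float(t) for t in range(20)]
print(per_step_forecast(history, prediction_length=3))
```

Because each step has its own independent model, the per-step fits can run in parallel, which is the property the bullet above highlights.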

### API Changes and Deprecations

- `DirectTabular` and `RecursiveTabular` models: hyperparameters `tabular_hyperparameters` and `tabular_fit_kwargs` are now deprecated in favor of `model_name` and `model_hyperparameters`.

These models now fit a single regression model from `autogluon.tabular` under the hood instead of creating an entire `TabularPredictor`. This results in lower disk usage and an API that is better aligned with the rest of the `timeseries` module.

Details and example usage:

```python
# New API: >= v1.4.0
predictor.fit(
    ...,
    hyperparameters={
        "RecursiveTabular": {"model_name": "CAT", "model_hyperparameters": {"iterations": 100}}
    }
)

# Old API: <= v1.3.1
predictor.fit(
    ...,
    hyperparameters={
        "RecursiveTabular": {"tabular_hyperparameters": {"CAT": {"iterations": 100}}}
    }
)
```

If you provide `tabular_hyperparameters` with a single model in v1.4.0, a warning will be logged and the parameter will be automatically converted to match the new API.

If you provide `tabular_hyperparameters` with >=2 models in v1.4.0, an error will be raised since it cannot automatically be converted to the new API.

- `Chronos` model: Hyperparameter `optimization_strategy` (deprecated in v1.3.0) has been removed in v1.4.0.
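The conversion rule described above for `tabular_hyperparameters` (one model is converted with a warning, two or more raise an error) can be sketched as follows. This is a hypothetical stand-alone helper written for illustration, not the code AutoGluon runs; the handling of an empty dict is an assumption of this sketch.

```python
import warnings


def convert_tabular_hyperparameters(model_kwargs: dict) -> dict:
    """Return a copy of `model_kwargs` that uses the v1.4.0 parameter names."""
    if "tabular_hyperparameters" not in model_kwargs:
        return dict(model_kwargs)
    converted = {k: v for k, v in model_kwargs.items() if k != "tabular_hyperparameters"}
    old = model_kwargs["tabular_hyperparameters"]
    if len(old) >= 2:
        # Several models cannot be mapped onto a single `model_name`.
        raise ValueError("tabular_hyperparameters with >=2 models cannot be converted")
    if not old:
        # Assumption of this sketch: an empty dict means nothing to convert.
        return converted
    ((model_name, model_hyperparameters),) = old.items()
    warnings.warn(
        "tabular_hyperparameters is deprecated; use model_name and model_hyperparameters",
        DeprecationWarning,
    )
    converted["model_name"] = model_name
    converted["model_hyperparameters"] = model_hyperparameters
    return converted
```

For example, `{"tabular_hyperparameters": {"CAT": {"iterations": 100}}}` becomes `{"model_name": "CAT", "model_hyperparameters": {"iterations": 100}}`, matching the old and new API snippets shown earlier in this section.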

### New Features

- Add `PerStepTabular` model that fits a separate tabular regression model for each step in the forecast horizon. [@shchur](https://github.com/shchur) ([#5189](https://github.com/autogluon/autogluon/pull/5189), [#5213](https://github.com/autogluon/autogluon/pull/5213))

- Improve heuristic for long-term forecast unrolling (`prediction_length > 64`) for Chronos-Bolt. [@abdulfatir](https://github.com/abdulfatir) ([#5177](https://github.com/autogluon/autogluon/pull/5177))

- `RecursiveTabular` model now supports the `lag_transforms` hyperparameter. [@shchur](https://github.com/shchur) ([#5184](https://github.com/autogluon/autogluon/pull/5184))

### Fixes and Improvements

- Improve the runtime of various `TimeSeriesDataFrame` operations by replacing `groupby` with efficient alternatives based on `indptr`. [@shchur](https://github.com/shchur) ([#5159](https://github.com/autogluon/autogluon/pull/5159))

- Refactor `DirectTabular` and `RecursiveTabular` models to use a single tabular model under the hood instead of a `TabularPredictor`. ([#5212](https://github.com/autogluon/autogluon/pull/5212))

- Reorganize `autogluon.timeseries.models.gluonts` namespace. [@canerturkmen](https://github.com/canerturkmen) ([#5104](https://github.com/autogluon/autogluon/pull/5104))

- Log the full stack trace in case of individual model failures during training. [@shchur](https://github.com/shchur) ([#5178](https://github.com/autogluon/autogluon/pull/5178))

- Deprecate the `optimization_strategy` hyperparameter for the Chronos (classic) model. [@shchur](https://github.com/shchur) ([#5202](https://github.com/autogluon/autogluon/pull/5202))

- Fix incompatibility with Python 3.9. [@prateekdesai04](https://github.com/prateekdesai04) ([#5220](https://github.com/autogluon/autogluon/pull/5220))

- Refactor the implementation of `RecursiveTabular` and `DirectTabular` models. [@shchur](https://github.com/shchur) ([#5184](https://github.com/autogluon/autogluon/pull/5184), [#5206](https://github.com/autogluon/autogluon/pull/5206))

- Fix typos and layout issues in the documentation. [@shchur](https://github.com/shchur) ([#5225](https://github.com/autogluon/autogluon/pull/5225))

- Fix refit_full failing during ensemble prediction if quantile_levels=[]. [@shchur](https://github.com/shchur) ([#5242](https://github.com/autogluon/autogluon/pull/5242))
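The `indptr`-based replacement for `groupby` mentioned in the list above can be illustrated with a small pure-Python sketch. It assumes `indptr` means the row offsets at which each time series starts in a contiguous long-format table (as in CSR sparse matrices); `build_indptr` and `slice_last_k` are hypothetical names, not AutoGluon functions.

```python
def build_indptr(item_ids):
    """Offsets such that rows indptr[i]:indptr[i + 1] belong to the i-th item.

    Assumes the rows of each item are stored contiguously.
    """
    if not item_ids:
        return [0]
    indptr = [0]
    for i in range(1, len(item_ids)):
        if item_ids[i] != item_ids[i - 1]:
            indptr.append(i)  # a new item starts at row i
    indptr.append(len(item_ids))
    return indptr


def slice_last_k(values, indptr, k):
    """Keep the last k rows of every item using only offset arithmetic."""
    out = []
    for start, end in zip(indptr[:-1], indptr[1:]):
        out.extend(values[max(start, end - k):end])
    return out


item_ids = ["A", "A", "A", "B", "B", "C"]
values = [1, 2, 3, 4, 5, 6]
indptr = build_indptr(item_ids)
print(indptr, slice_last_k(values, indptr, k=2))
```

Once the offsets are known, per-series operations reduce to slicing, which avoids the per-group overhead of a general-purpose `groupby`.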

--------

## Multimodal

- Change multilingual preset to use FP32 to avoid DeBERTa BFloat16 issues. [@tonyhoo](https://github.com/tonyhoo) ([#5139](https://github.com/autogluon/autogluon/pull/5139))

- Update NLTK dependency constraint to <3.10 to address CVE-2024-39705. [@tonyhoo](https://github.com/tonyhoo) ([#5147](https://github.com/autogluon/autogluon/pull/5147))

--------

## Documentation and CI

- Fix Python syntax in CUDA library path detection. [@tonyhoo](https://github.com/tonyhoo) ([#5166](https://github.com/autogluon/autogluon/pull/5166))

- Upgrade image to use torch 2.7.1. [@tonyhoo](https://github.com/tonyhoo) ([#5168](https://github.com/autogluon/autogluon/pull/5168))

- Show tabular model aliases in the documentation. [@shchur](https://github.com/shchur) ([#5183](https://github.com/autogluon/autogluon/pull/5183))

- Fix lint check. [@prateekdesai04](https://github.com/prateekdesai04) ([#5192](https://github.com/autogluon/autogluon/pull/5192))

- Add time limit conversion to seconds in benchmark config script. [@tonyhoo](https://github.com/tonyhoo) ([#5224](https://github.com/autogluon/autogluon/pull/5224))

--------

## Special Thanks

- [Lennart Purucker](https://github.com/LennartPurucker) and [David Holzmüller](https://github.com/dholzmueller) for helping to implement TabPFNv2, TabICL, RealMLP and TabM, along with providing improved memory estimate logic for the models.

- [Steven Shen](https://github.com/HuawenShen) for implementing the front-end web UI and MCP backend for MLZero.

- [Atharva Rajan Kale](https://github.com/Atharva-Rajan-Kale) for helping to automate and streamline our DLC release process.

## Contributors

Full Contributor List (ordered by # of commits):

[@shchur](https://github.com/shchur) [@Innixma](https://github.com/Innixma) [@tonyhoo](https://github.com/tonyhoo) [@prateekdesai04](https://github.com/prateekdesai04) [@FireballDWF](https://github.com/FireballDWF) [@canerturkmen](https://github.com/canerturkmen) [@abdulfatir](https://github.com/abdulfatir) [@rsj123](https://github.com/rsj123) [@xiyuanzh](https://github.com/xiyuanzh) [@mwhol](https://github.com/mwhol) [@daradib](https://github.com/daradib) [@emmanuel-ferdman](https://github.com/emmanuel-ferdman) [@adibiasio](https://github.com/adibiasio) [@LennartPurucker](https://github.com/LennartPurucker) [@dholzmueller](https://github.com/dholzmueller)

### New Contributors

- [@mwhol](https://github.com/mwhol) made their first contribution in [#5217](https://github.com/autogluon/autogluon/pull/5217)

- [@daradib](https://github.com/daradib) made their first contribution in [#5231](https://github.com/autogluon/autogluon/pull/5231)

- [@emmanuel-ferdman](https://github.com/emmanuel-ferdman) made their first contribution in [#5069](https://github.com/autogluon/autogluon/pull/5069)
